Protein Integrity Verification by Mass Spectrometry: A Comprehensive Guide for Robust Biomolecular Analysis

Abstract

This article provides a comprehensive guide to mass spectrometry (MS) methods for verifying protein integrity, a critical step in biomedical research and drug development. It explores the foundational principles of protein integrity and the challenges posed by degradation and dynamic range. The scope extends to detailed methodological workflows, from sample preparation to advanced LC-MS/MS and data-independent acquisition, highlighting applications in biopharmaceuticals and interactome studies. It further addresses key troubleshooting strategies for common issues like batch effects and missing data, and offers a comparative analysis of software platforms and validation techniques. Designed for researchers and scientists, this resource aims to equip professionals with the knowledge to implement robust, reproducible proteomic verification in their workflows.

The Pillars of Protein Integrity: Defining, Challenging, and Quantifying Biomolecular Stability

In the development of biopharmaceuticals, protein integrity is a critical quality attribute that extends far beyond simple purity. It encompasses the structural, conformational, and functional state of a protein, ensuring it maintains its native conformation and biological activity throughout manufacturing, formulation, and storage. While purity analysis confirms the absence of contaminants, integrity verification confirms that the protein itself remains correctly folded, non-aggregated, and functionally competent. Mass spectrometry (MS) has emerged as a powerful analytical platform that provides insight into many aspects of protein integrity, from primary structure to higher-order conformation, enabling researchers to ensure product safety, efficacy, and stability [1] [2].

This Application Note details integrated MS-based protocols for the multi-level assessment of protein integrity, providing researchers in drug development with robust methodologies for characterizing therapeutic proteins.

The Multi-Faceted Nature of Protein Integrity

Protein integrity is a multi-dimensional attribute. Conformational stability refers to the maintenance of the secondary, tertiary, and quaternary structure under various environmental stresses. Functional integrity is the retention of biological activity, which is directly dependent on the native three-dimensional structure [3]. Finally, compositional integrity includes the accurate primary sequence and appropriate post-translational modifications (PTMs).

The relationship between structure, stability, and function was clearly demonstrated in a study of lysozyme, DNase I, and lactate dehydrogenase (LDH). Using High Sensitivity Differential Scanning Calorimetry (HSDSC) and FT-Raman spectroscopy, researchers showed that the ability of lysozyme to refold after thermal denaturation was directly linked to the retention of its native structure and enzymatic activity. In contrast, the irreversible denaturation of DNase I and LDH led to a complete loss of function, underscoring the critical link between structural preservation and activity [3].

Table 1: Key Aspects of Protein Integrity and Their Impact

| Aspect of Integrity | Description | Consequence of Loss |
|---|---|---|
| Conformational Integrity | Preservation of secondary, tertiary, and quaternary structure. | Loss of biological function; potential for increased immunogenicity. |
| Functional Integrity | Retention of specific biological or enzymatic activity. | Reduced drug efficacy. |
| Compositional Integrity | Correct primary amino acid sequence and desired PTMs. | Altered pharmacokinetics, efficacy, and stability. |
| Aggregation State | Absence of undesirable higher-order aggregates or fragments. | Product instability, reduced efficacy, and potential safety issues. |

Mass Spectrometry Methods for Structural Integrity Assessment

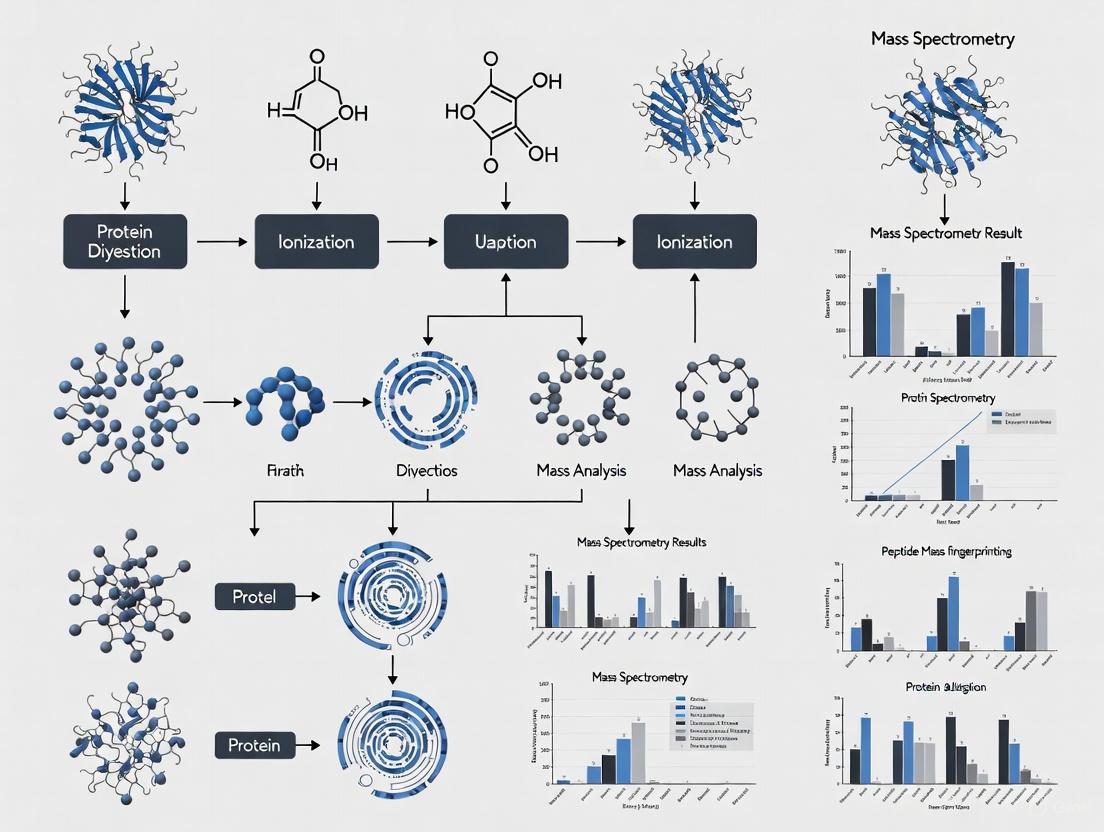

Mass spectrometry techniques provide detailed information on protein structure and dynamics. The workflow figure above illustrates the pathway for MS-based integrity verification, from sample analysis to data interpretation.

Top-Down and Bottom-Up MS for Primary Structure Analysis

Bottom-up proteomics, the most established approach, involves enzymatic digestion of proteins into peptides followed by LC-MS/MS analysis. It excels at identifying proteins, sequencing peptides, quantifying abundance, and locating PTMs [4] [5]. Top-down proteomics, an advancing methodology, analyzes intact proteins, providing a complete picture of proteoforms, including combinations of PTMs present on a single molecule [4].

Table 2: Mass Spectrometry Techniques for Protein Integrity Analysis

| Technique | Primary Application | Key Strengths | Common Instrumentation |
|---|---|---|---|
| Bottom-Up LC-MS/MS | Sequence confirmation, PTM mapping, quantification. | High sensitivity and robust identification. | Orbitrap platforms (e.g., Exploris, Astral), timsTOF [5]. |
| Top-Down MS | Intact mass analysis, proteoform characterization. | Preserves labile PTM information and protein stoichiometry. | High-resolution systems like Orbitrap Excedion Pro, timsTOF [4]. |
| Targeted (PRM/MRM) | High-precision quantification of specific targets (e.g., impurities). | High sensitivity, accuracy, and multiplexing capability. | Triple quadrupole, Q Exactive HF-X, Orbitrap platforms [6] [5]. |
| Hydrogen-Deuterium Exchange (HDX-MS) | Conformational dynamics, epitope mapping, stability. | Probes solvent accessibility and protein folding. | Coupled with high-resolution MS systems [7]. |
| Size Exclusion Chromatography MS (SEC-MS) | Analysis of size variants and aggregates. | Simultaneously separates and identifies oligomeric states. | LC systems coupled to MS detectors [1]. |

Protocol: Primary Structure and PTM Analysis via Bottom-Up LC-MS/MS

This protocol is adapted from an integrated workflow for plasma proteome analysis [6] and can be applied to most recombinant protein samples.

Sample Preparation (Timing: ~2 hours)

- Denaturation and Reduction: Dilute the protein sample in a buffer containing 6 M urea. Add tris(2-carboxyethyl)phosphine (TCEP) to a final concentration of 20 mM and incubate at 37°C for 60 minutes to reduce disulfide bonds [6].

- Alkylation: Add iodoacetamide (IAA) to a final concentration of 40 mM. Incubate at room temperature in the dark for 30 minutes to alkylate cysteine residues [6].

Enzymatic Digestion (Timing: 6-8 hours)

- Dilute the urea concentration to below 1 M using 50 mM ammonium bicarbonate.

- Add trypsin at an enzyme-to-substrate ratio of 1:50 (w/w). Incubate at 37°C with shaking (500 rpm) for 6-8 hours.

- Stop the digestion by adding formic acid to a final concentration of 1% [6].
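The dilution and enzyme amounts in the digestion steps reduce to simple arithmetic. The sketch below assumes a hypothetical sample of 100 µL at 6 M urea containing 100 µg of protein and a target of 0.9 M urea (a margin below the 1 M limit); the function names are illustrative and not part of the cited workflow.

```python
def buffer_to_add_ul(sample_ul, urea_m, target_urea_m):
    """Volume (uL) of 50 mM ammonium bicarbonate that lowers urea to target_urea_m.

    Based on C1 * V1 = C2 * V2; returns 0 if the sample is already at or below target.
    """
    if urea_m <= target_urea_m:
        return 0.0
    final_ul = sample_ul * urea_m / target_urea_m
    return final_ul - sample_ul


def trypsin_ug(protein_ug, ratio=50.0):
    """Trypsin mass (ug) for an enzyme-to-substrate ratio of 1:ratio (w/w)."""
    return protein_ug / ratio


# Hypothetical sample: 100 uL at 6 M urea containing 100 ug protein.
add_ul = buffer_to_add_ul(100.0, 6.0, 0.9)
enzyme_ug = trypsin_ug(100.0)
print(f"Add {add_ul:.0f} uL of 50 mM ammonium bicarbonate and {enzyme_ug:.1f} ug trypsin")
```

The volume of trypsin solution itself dilutes the urea slightly further, so the calculation is conservative.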

Desalting (Timing: ~1 hour)

- Prepare a C18 desalting column (e.g., using StageTips).

- Activate the column with methanol and acetonitrile, then equilibrate with 0.1% formic acid (Solvent A).

- Load the acidified peptide mixture onto the column. Wash with Solvent A to remove salts.

- Elute peptides with 40-60% acetonitrile in 0.1% formic acid. Concentrate the eluate in a vacuum centrifuge [6].

LC-MS/MS Analysis and Data Processing

- Reconstitute desalted peptides in Solvent A and separate using a nano-flow LC system with a C18 column and a gradient of increasing acetonitrile.

- Acquire data on a high-resolution mass spectrometer (e.g., Orbitrap Astral, timsTOF) using data-dependent acquisition (DDA) or data-independent acquisition (DIA).

- Process raw data using software (e.g., MaxQuant, Spectronaut) to search against a protein sequence database for identification and quantification [6] [5].
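For sequence confirmation, results are often summarized as the fraction of the expected sequence covered by identified peptides. A minimal sketch, assuming a simplified trypsin rule (cleave after K or R, not before P), no missed cleavages, and a hypothetical sequence and peptide list standing in for a search-engine export; real software also handles missed cleavages, I/L ambiguity, and modified peptides.

```python
import re


def tryptic_peptides(sequence, min_len=6):
    """In silico trypsin digest with no missed cleavages.

    Simplified rule: cleave C-terminal to K or R unless the next residue is P.
    Peptides shorter than min_len are dropped (including the empty trailing piece).
    """
    pieces = re.split(r"(?<=[KR])(?!P)", sequence)  # zero-width split (Python 3.7+)
    return [p for p in pieces if len(p) >= min_len]


def sequence_coverage(sequence, identified):
    """Percent of residues covered by at least one identified peptide."""
    covered = [False] * len(sequence)
    for pep in identified:
        start = sequence.find(pep)
        while start != -1:  # mark every occurrence of the peptide
            for i in range(start, start + len(pep)):
                covered[i] = True
            start = sequence.find(pep, start + 1)
    return 100.0 * sum(covered) / len(sequence)


# Hypothetical 27-residue sequence and peptides "identified" by the search.
seq = "AAGKPLLSRDEFGHIKMNPQRSTVWYK"
print(tryptic_peptides(seq))  # ['AAGKPLLSR', 'DEFGHIK', 'STVWYK']
print(f"{sequence_coverage(seq, ['AAGKPLLSR', 'STVWYK']):.1f}% coverage")  # 55.6% coverage
```

Note that the K-P bond in AAGKPLLSR is not cleaved, and the five-residue MNPQR is filtered out by the length cut-off.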

Assessing Conformational and Functional Integrity

Biophysical Techniques Correlated with MS

While MS is powerful, a full integrity profile requires orthogonal techniques. Fourier-Transform Infrared (FTIR) and Raman Spectroscopy are highly sensitive to changes in protein secondary structure, which they detect by monitoring the amide I band (~1650 cm⁻¹) [7]. Differential Scanning Calorimetry (DSC) directly measures thermal stability by determining the melting temperature (Tm) and enthalpy (ΔH) of protein unfolding [1] [3]. Dynamic Light Scattering (DLS) measures hydrodynamic radius and can detect small quantities of protein aggregates [1].
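To illustrate how Tm and ΔH are read from a DSC thermogram: Tm is commonly taken as the temperature of maximum excess heat capacity, and the calorimetric enthalpy is the area under the baseline-subtracted excess heat capacity curve. The sketch assumes the baseline has already been subtracted and the signal normalized to molar units; the Gaussian peak is synthetic, hypothetical data, not a measured protein.

```python
import math


def tm_and_enthalpy(temps_c, excess_cp):
    """Return (Tm in C, calorimetric dH in kJ/mol) from a baseline-subtracted DSC trace.

    temps_c: ascending temperatures (C); excess_cp: excess heat capacity, kJ/(mol*K).
    dH is the trapezoidal area under the excess heat capacity curve.
    """
    i_max = max(range(len(excess_cp)), key=excess_cp.__getitem__)
    tm = temps_c[i_max]
    dh = sum(
        0.5 * (excess_cp[i] + excess_cp[i + 1]) * (temps_c[i + 1] - temps_c[i])
        for i in range(len(temps_c) - 1)
    )
    return tm, dh


# Hypothetical thermogram: Gaussian peak at 65 C, area 400 kJ/mol, width 3 K.
temps = [40 + 0.1 * i for i in range(501)]  # 40 to 90 C
area, center, sigma = 400.0, 65.0, 3.0
cp = [
    area / (sigma * math.sqrt(2 * math.pi)) * math.exp(-((t - center) ** 2) / (2 * sigma**2))
    for t in temps
]
tm, dh = tm_and_enthalpy(temps, cp)
print(f"Tm = {tm:.1f} C, dH = {dh:.0f} kJ/mol")
```

Real thermograms are usually fitted with a two-state or multi-state unfolding model rather than read off directly, particularly when transitions overlap.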

Protocol: Conformational Stability Analysis via HDX-MS

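Hydrogen-Deuterium Exchange coupled to MS (HDX-MS) is a powerful technique for probing protein conformation and dynamics in solution. Backbone amide hydrogens exchange with deuterium at rates that depend on their solvent accessibility and hydrogen bonding, so exposed or flexible regions take up deuterium faster than protected ones.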

Deuterium Labeling (Timing: Variable)

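- Dilute the purified protein into a deuterated buffer (e.g., D₂O-based PBS, pD 7.0). The dilution factor sets the final deuterium content of the labeling reaction and, together with protein concentration, must be optimized; back-exchange is minimized later by the quench and LC conditions.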

- Incubate for various time points (e.g., 10 seconds, 1 minute, 10 minutes, 1 hour) at a controlled temperature (e.g., 25°C) to allow deuterium incorporation.

Quenching and Digestion (Timing: < 2 minutes)

- At each time point, withdraw an aliquot and mix it with an ice-cold, acidic quench solution to lower the pH to ~2.5 and the temperature to ~0°C. Under these conditions the exchange reaction slows drastically.

- Immediately inject the quenched sample onto a cooled, immobilized pepsin column for online digestion (typically < 1 minute).

LC-MS Analysis and Data Processing

- Separate the resulting peptides using a short, steep UPLC gradient under quench conditions to minimize back-exchange.

- Acquire mass spectra on a high-resolution mass spectrometer.

- Process data using dedicated HDX-MS software to identify peptides and calculate deuterium uptake for each peptide at each time point. A change in deuterium uptake under different conditions (e.g., with ligand, after stress) indicates a conformational change.
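The uptake calculation performed by HDX software can be sketched as follows: the neutral mass of a peptide is taken from the intensity-weighted centroid of its isotopic envelope, and uptake at time t is the centroid shift relative to the undeuterated control. A difference between states (e.g., with vs. without ligand) is then the difference of these values at matched time points. The peak lists below are hypothetical, and the normalization to a maximally deuterated control is one common convention, assumed here.

```python
PROTON_DA = 1.007276  # proton mass (Da)


def centroid_mass(peaks, charge):
    """Neutral mass (Da) from the intensity-weighted centroid of one isotopic envelope.

    peaks: list of (m/z, intensity) pairs for a single charge state.
    """
    total = sum(intensity for _, intensity in peaks)
    mz_centroid = sum(mz * intensity for mz, intensity in peaks) / total
    return charge * (mz_centroid - PROTON_DA)


def deuterium_uptake(peaks_t, peaks_undeuterated, charge):
    """Absolute uptake (Da) at time t: centroid shift from the undeuterated control."""
    return centroid_mass(peaks_t, charge) - centroid_mass(peaks_undeuterated, charge)


def relative_fractional_uptake(uptake_t, uptake_max):
    """Uptake relative to a maximally deuterated control (corrects for back-exchange)."""
    return uptake_t / uptake_max


# Hypothetical 2+ peptide: undeuterated envelope and envelope after 1 min in D2O buffer.
undeuterated = [(500.25, 100), (500.75, 60), (501.25, 20)]
one_minute = [(500.75, 40), (501.25, 100), (501.75, 60), (502.25, 20)]
d_1min = deuterium_uptake(one_minute, undeuterated, charge=2)
print(f"Uptake after 1 min: {d_1min:.2f} Da")
```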

Application: Monitoring Host Cell Proteins as Integrity Indicators

Residual host cell proteins (HCPs) are process-related impurities that can co-purify with biopharmaceuticals, posing a risk to drug stability and patient safety. MS is uniquely capable of identifying and quantifying individual HCPs, complementing traditional immunoassays [2].

MS Workflow for HCP Monitoring:

- Sample Preparation: Digest the drug substance or process intermediate. Depletion of the therapeutic protein can be performed to enrich for low-abundance HCPs.

- Data Acquisition: Use high-sensitivity DIA or label-free DDA on instruments like the Orbitrap Astral to achieve the depth of coverage needed to detect low-level HCPs [2].

- Data Analysis: Search data against a database of the host organism's proteome. Software tools and artificial intelligence are increasingly used to improve the reliability of HCP identification and to prioritize HCPs based on risk (e.g., enzymatic activity, immunogenicity) [2].
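HCP levels are commonly reported in ppm, meaning ng of HCP per mg of drug substance. One label-free way to estimate absolute amounts, assumed here rather than taken from [2], is the "Hi3" approach: the mean intensity of an HCP's three most intense peptides is compared with that of a spiked standard protein of known amount. The sketch below uses hypothetical intensities, molecular weight, and load, and inherits the Hi3 assumption that top-3 peptide responses are comparable across proteins.

```python
def top3_mean(intensities):
    """Mean of the three most intense peptide signals (fewer if fewer are available)."""
    top = sorted(intensities, reverse=True)[:3]
    return sum(top) / len(top)


def hi3_fmol(hcp_peptides, standard_peptides, standard_fmol):
    """Hi3-style estimate: HCP amount scaled from a spiked standard of known amount."""
    return standard_fmol * top3_mean(hcp_peptides) / top3_mean(standard_peptides)


def hcp_ppm(hcp_fmol, hcp_mw_da, drug_ug_on_column):
    """Express an HCP amount as ppm, i.e., ng HCP per mg drug substance."""
    hcp_ng = hcp_fmol * 1e-15 * hcp_mw_da * 1e9  # fmol -> mol, x g/mol -> g, -> ng
    return hcp_ng / (drug_ug_on_column / 1000.0)  # ug -> mg


# Hypothetical: 50 fmol spiked standard, a 40 kDa HCP, 1 ug drug substance injected.
amount = hi3_fmol([4.0e6, 3.0e6, 2.0e6, 5.0e5], [6.0e7, 5.8e7, 6.2e7], standard_fmol=50.0)
print(f"{amount:.2f} fmol, {hcp_ppm(amount, 40_000, 1.0):.0f} ppm")
```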

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Key Research Reagent Solutions for Protein Integrity Analysis

| Item | Function/Application | Example/Critical Feature |
|---|---|---|
| Trypsin, MS Grade | Proteolytic digestion for bottom-up proteomics. | High purity; chemically modified trypsin to minimize autolysis (self-cleavage). |
| Urea & IAA | Protein denaturation and cysteine alkylation. | High-purity, freshly prepared urea solutions to avoid cyanate formation, which causes artifactual carbamylation. |
| TCEP | Reduction of disulfide bonds. | Odorless alternative to DTT; stable at room temperature. |
| C18 StageTips | Desalting and cleanup of peptide mixtures. | In-house packed tips for low-cost, high-recovery sample preparation [6]. |
| HDX Buffers | Deuterated buffers for hydrogen-deuterium exchange. | High D-content; precise pD adjustment. |
| SomaLogic SomaScan | Affinity-based proteomics platform. | Used for large-scale studies of the circulating proteome; useful for biomarker discovery [8]. |
| Olink Explore HT | Multiplexed, proximity extension assay platform. | Used in large-scale proteomics projects like the UK Biobank [8]. |
| Standard BioTools | Provider of the SomaScan platform. | Enables analysis of thousands of proteins simultaneously [8]. |

A multi-parametric approach is essential for defining protein integrity in modern biopharmaceutical development. By integrating mass spectrometry—spanning top-down, bottom-up, and targeted strategies—with orthogonal biophysical techniques, researchers can build a comprehensive integrity profile that directly links structural conformation to biological function. The protocols and applications detailed herein provide a framework for implementing these powerful MS-based methods to ensure the quality, safety, and efficacy of protein therapeutics.

In mass spectrometry (MS)-based proteomics, the "dynamic range" refers to the span between the most abundant and least abundant proteins in a sample. This presents a central challenge for protein integrity verification research and drug development: high-abundance proteins can suppress the detection and quantification of low-abundance species, many of which are biologically significant targets or potential biomarkers [9] [10] [11]. In complex biological samples like plasma, this dynamic range can exceed 10 orders of magnitude, with 22 proteins constituting about 99% of the protein mass, while the remaining 1% comprises thousands of distinct lower-abundance proteins [12]. This imbalance means that without specialized techniques, the ion signals of low-abundance peptides are often drowned out during ionization, making them invisible to the mass spectrometer [10]. Overcoming this limitation is critical for obtaining a comprehensive view of the proteome, enabling the discovery of novel biomarkers, and advancing precision medicine.

Technological and Methodological Solutions

Researchers have developed a suite of strategies to compress the dynamic range of protein abundances, making low-abundance proteins accessible to MS analysis. The following table summarizes the core approaches.

Table 1: Core Methodologies for Overcoming Dynamic Range Challenges in Proteomics

| Method Category | Key Principle | Key Advantage | Quantitative Performance |
|---|---|---|---|
| Sample Pre-fractionation & Enrichment | Depletes highly abundant proteins or enriches low-abundance targets prior to digestion [10] [11]. | Directly reduces sample complexity and compresses dynamic range. | Improves sensitivity but requires careful validation to avoid co-depletion of targets [13]. |
| Bead-Based Enrichment | Uses paramagnetic beads with specific binders to isolate and concentrate low-abundance proteins from complex samples [11]. | Highly specific; can be automated for high-throughput applications. | The ENRICH-iST kit reports low coefficients of variation (CVs) and high reproducibility [11]. |
| Nanoparticle Protein Corona (Proteograph) | Uses engineered nanoparticles to bind proteins, compressing dynamic range via competitive binding at the nanoparticle surface [12]. | Unbiased, scalable, and enables deep plasma proteome coverage. | Identified >7,000 plasma proteins; maintains fold change accuracy and precision across batches [12]. |
| Advanced MS Acquisition Modes | Multiplexes precursor ions into different m/z range packets during instrument transients [9]. | Maximizes instrument usage without increasing measurement time or cost. | Increases protein identifications by 9% (DDA) and 4% (DIA), while reducing quantitative CV by >50% [9]. |
| Computational Protein Inference | Leverages peptides shared across multiple proteins for quantification using combinatorial optimization [14]. | Allows quantification of proteins that lack unique peptides. | Enables relative abundance calculations for proteins previously discarded from analysis [14]. |
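To show how shared peptides can carry quantitative information, the sketch below poses protein quantification as a linear least-squares problem in which each peptide intensity is modeled as the sum of the abundances of the proteins that contain it. This is a simplified stand-in, not the combinatorial optimization method of [14], and it assumes equal ionization efficiency for all peptides, which real data violate. Proteins, mapping, and intensities are hypothetical.

```python
import numpy as np

proteins = ["A", "B", "C"]  # hypothetical; protein B has no unique peptide
# Rows: peptides; columns: proteins; 1 means the peptide occurs in that protein.
mapping = np.array(
    [
        [1, 0, 0],  # p1 unique to A
        [1, 1, 0],  # p2 shared by A and B
        [0, 1, 1],  # p3 shared by B and C
        [0, 0, 1],  # p4 unique to C
    ],
    dtype=float,
)
intensities = np.array([10.0, 15.0, 7.0, 2.0])  # hypothetical peptide intensities

# Least-squares abundances that best explain the observed peptide intensities.
abundance, residuals, rank, _ = np.linalg.lstsq(mapping, intensities, rcond=None)
for name, value in zip(proteins, abundance):
    print(f"{name}: {value:.2f}")
```

With noisy data, plain least squares can return negative abundances; a non-negative solver such as `scipy.optimize.nnls` is one way to constrain the estimates.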

Detailed Experimental Protocols

Protocol: Bead-Based Enrichment for Low-Abundance Proteins

This protocol, based on commercially available kits like the ENRICH-iST, is designed for processing plasma or serum samples to enhance the detection of low-abundance proteins [11].

1. Binding: Incubate the plasma sample with coated paramagnetic beads. The beads are functionalized with specific binders that selectively capture target proteins or a broad range of low-abundance proteins via affinity interactions.

2. Washing: Apply a magnetic field to separate the beads from the solution. Wash the beads thoroughly to remove non-specifically bound contaminants and highly abundant proteins.

3. Lysis and Denaturation: Resuspend the beads in the LYSE reagent and incubate in a thermal shaker (typically at ~95°C for 10 minutes). Heat denatures the captured proteins, while the reducing and alkylating components of the reagent cleave and cap disulfide bonds so that the proteins are fully linearized.

4. Digestion: Digest the proteins into peptides directly on the beads. Add a proteolytic enzyme (typically trypsin) and incubate under optimized conditions (e.g., 37°C for several hours) for complete digestion.

5. Peptide Purification: Clean up the digested peptides using solid-phase extraction (SPE) to remove salts, detergents, and other impurities that could interfere with downstream LC-MS analysis.

6. MS Analysis: Reconstitute the purified peptides in an appropriate solvent (e.g., 0.1% formic acid, 3% acetonitrile) for injection into the LC-MS/MS system [11].

This entire workflow can be completed in approximately 5 hours and is amenable to automation for processing large sample cohorts [11].

Protocol: Nanoparticle Protein Corona Workflow (Proteograph)

The Proteograph Product Suite employs a multiplexed nanoparticle workflow to compress the dynamic range of plasma proteomes, enabling deep coverage [12].

1. Sample Incubation: Incubate the plasma sample with the proprietary engineered nanoparticles. During incubation, a protein "corona" forms on the nanoparticle surface through competitive binding, in which low-abundance proteins with high affinity can displace high-abundance proteins with lower affinity.

2. Corona Isolation and Washing: Separate the nanoparticles with their bound protein corona from the bulk solution, then wash to remove unbound or weakly associated proteins.

3. Protein Elution and Digestion: Elute the proteins from the nanoparticle corona. Denature, reduce, and alkylate the proteins following standard protocols (e.g., using TCEP or DTT for reduction and iodoacetamide for alkylation), then digest the protein mixture into peptides using trypsin.

4. Peptide Clean-up: Desalt and concentrate the resulting peptides using StageTips or SPE plates to ensure compatibility with LC-MS.

5. LC-MS Analysis: Analyze the peptides using a high-performance LC-MS system, typically with a Data-Independent Acquisition (DIA) method, for example on an Orbitrap Astral mass spectrometer. The data are processed using specialized software (e.g., DIA-NN in library-free mode) for protein identification and quantification [12].

Protocol: Multiple Accumulation Precursor Mass Spectrometry (MAP-MS)

MAP-MS is an instrumental method that enhances dynamic range by using otherwise "wasted" instrument time [9].

1. Instrument Setup: Implement the method on a trapping instrument such as an Orbitrap Exploris 480 coupled to an EASY-nLC 1200 and a UHPLC column (e.g., Aurora Ultimate XT 25×75 C18).

2. Accumulation and Multiplexing: During the long transient recording times of the Orbitrap, multiplex precursor ions by accumulating them into several distinct m/z range packets rather than scanning a single broad range.

3. Data Acquisition: Perform this in either Data-Dependent Acquisition (DDA) or Data-Independent Acquisition (DIA) mode. Because each m/z packet is accumulated separately, a few high-abundance ions cannot dominate the ion population of the whole scan, so the instrument's dynamic range is used more efficiently.

4. Data Analysis: Process the resulting spectra with standard proteomics software suites. The output shows increased protein identifications and improved quantitative precision compared to standard methods [9].
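Why accumulating separate m/z packets helps low-abundance ions can be illustrated with a toy model. It assumes that each accumulation stops at a fixed total charge (an AGC-style target, 3e6 charges here, chosen only for illustration), that this charge is shared among co-accumulated precursors in proportion to their ion flux, and that packets split the target equally. These are simplifying assumptions, not the published MAP-MS implementation [9]; the precursor list is hypothetical.

```python
def ions_per_species(flux, windows, charge_target=3e6):
    """Toy model of ions collected per precursor when each m/z window is filled separately.

    flux: {m/z: relative ion flux}; windows: list of (low, high) m/z bounds.
    Each window receives an equal share of the charge target, divided among
    the precursors inside it in proportion to their flux.
    """
    share = charge_target / len(windows)
    ions = {}
    for low, high in windows:
        inside = {mz: a for mz, a in flux.items() if low <= mz < high}
        total = sum(inside.values())
        for mz, a in inside.items():
            ions[mz] = share * a / total
    return ions


# Hypothetical precursors: one dominant ion at m/z 450 and three minor ones.
flux = {450.0: 1e6, 520.0: 10.0, 780.0: 10.0, 950.0: 1.0}
single = ions_per_species(flux, [(400, 1200)])
split = ions_per_species(flux, [(400, 600), (600, 1200)])
print(f"m/z 780: {single[780.0]:.0f} ions (one window) vs {split[780.0]:.0f} (two windows)")
```

In this model the minor precursors that do not share a window with the dominant ion gain several orders of magnitude in ion count, at the cost of halving the ions collected for the dominant species.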

Workflow Visualization

The following diagram illustrates the logical progression of decisions and methodologies for tackling the dynamic range challenge, from sample preparation to data analysis.

The Scientist's Toolkit: Essential Research Reagents & Materials

Successful navigation of the dynamic range challenge relies on a suite of specialized reagents and materials. The following table details key solutions for robust and reproducible results.

Table 2: Key Research Reagent Solutions for Dynamic Range Challenges

| Item | Function & Application | Specific Examples |
|---|---|---|
| Paramagnetic Bead Kits | Selective isolation and concentration of low-abundance proteins from complex samples like plasma/serum; ideal for targeted studies [11]. | ENRICH-iST Kit [11] |
| Nanoparticle Kits | Unbiased dynamic range compression for deep, discovery-phase profiling of biofluids; suited for large-scale cohort studies [12]. | Proteograph XT Assay Kit [12] |
| Lysis Buffers & Inhibitors | Reagent-based cell lysis and solubilization of proteins while inhibiting endogenous proteases/phosphatases to preserve sample integrity [10]. | Custom buffers with protease/phosphatase inhibitors [10] |
| Protein Assays | Accurate quantification of protein concentration after lysis to ensure consistent loading across experiments and control for yield [10]. | Pierce Protein Assays (commercial examples) [10] |
| Digestion Enzymes | High-purity, specific proteases (e.g., trypsin) for complete and reproducible digestion of proteins into peptides for bottom-up MS [10]. | Trypsin, Lys-C [10] |
| Desalting & Clean-up Kits | Removal of salts, detergents, and other interfering substances from peptide digests prior to LC-MS to prevent ion suppression [10] [11]. | Solid-Phase Extraction (SPE) tips, StageTips [10] |
| Stable Isotope Labels | Metabolic (SILAC) or chemical (iTRAQ, TMT) incorporation of tags for precise multiplexed relative quantification across samples [13]. | SILAC, iTRAQ, TMT reagents [13] |

In mass spectrometry imaging (MSI) and quantitative proteomics, data integrity is paramount. The journey from sample preparation to final data visualization is fraught with potential sources of degradation that can compromise analytical results. Protein modifications and data fragmentation introduce significant artifacts that distort biological interpretations, particularly in protein integrity verification research crucial for drug development.

The selection of analytical color schemes represents a frequently overlooked yet critical point of potential data degradation. While rainbow-based colormaps like "jet" remain popular for their visual appeal, they introduce well-documented artifacts that can actively mislead data interpretation [15] [16]. These colormaps are not perceptually uniform, meaning equal changes in data value do not produce equal changes in perceived color intensity. Furthermore, their use of multiple hues makes accurate interpretation challenging for the approximately 8% of males of European descent with color vision deficiencies (CVDs) [16]. This degradation in data representation directly impacts the reliability of protein verification studies, potentially leading to false conclusions in therapeutic protein characterization.

Mechanisms of Data Degradation

Perceptual Distortion from Non-Linear Colormaps

The use of non-perceptually uniform colormaps creates a form of systematic data degradation by distorting the visual representation of quantitative information. In the jet colormap, the perceptual distance for the same quantitative change in signal (e.g., 1.39) can vary significantly (e.g., from 47.5 to 57.0) depending on where it occurs in the data range [16]. This non-linear relationship means that data gradients appear artificially steep in some regions and flattened in others, misleading researchers about the true distribution of protein abundances in MSI heatmaps.

The human visual system is naturally drawn to areas of high luminance and specific hues, particularly yellow. Rainbow colormaps exploit this by placing bright yellow in the middle of their data range, arbitrarily drawing the viewer's attention to medium-intensity values rather than the highest data values [16]. This attentional bias can cause researchers to overlook genuinely high-abundance regions in protein distribution maps, potentially missing critical biomarkers or localization patterns in drug target verification.

Exclusionary Data Visualization Through Color Inaccessibility

The degradation extends beyond mere misrepresentation to active exclusion of researchers with color vision deficiencies (CVDs). Approximately 8% of males of European descent and <1% of females have some form of CVD [16]. The red-green confusion characteristic of the most common forms of CVD (protanopia and deuteranopia) renders many rainbow-based visualizations quantitatively useless for these individuals. This represents not just an accessibility oversight but a fundamental degradation of data communicability across the scientific community.

When MSI data is visualized using problematic colormaps, the resulting heatmaps become scientifically ambiguous for a significant portion of researchers. For example, a protein distribution that appears as a clear gradient to those with normal color vision may show virtually no perceptible variation to someone with deuteranopia [16]. This fragmentation in data interpretation compromises collaborative research efforts and reduces the reproducible value of published findings in protein verification studies.

Analytical Fragmentation in Quantitative Workflows

The degradation pathway extends to methodological fragmentation in quantitative proteomics. In meat authentication studies, traditional peptide targeting strategies require labor-intensive, database-dependent identification of species-specific peptides followed by recovery rate validation [17]. This approach creates workflow inefficiencies where researchers must perform uniqueness queries peptide-by-peptide against entire databases, a process described as "labor-intensive and inefficient" [17].

This fragmentation in analytical methodology introduces validation bottlenecks that delay verification of protein integrity. Without streamlined processes for marker validation, the critical path from raw data to quantitative conclusion becomes fragmented, increasing the risk of analytical errors propagating through to final results. The absence of standardized, efficient workflows represents a systemic vulnerability in protein verification methodologies used throughout drug development pipelines.

Quantitative Assessment of Visualization Artifacts

Table 1: Comparative Performance of Colormaps in MSI Data Representation

| Colormap Type | Perceptual Uniformity | CVD Accessibility | Quantitative Accuracy | Recommended Use |
|---|---|---|---|---|
| Jet (Rainbow) | Poor - introduces artificial boundaries | Problematic for 8% of population | Low - misleading intensity perception | Not recommended |
| Hot | Moderate - linear RGB but not perceptually uniform | Moderate - some differentiation issues | Moderate - better than rainbow | Acceptable alternative |
| Greyscale | High - perceptually linear gradient | High - no hue dependency | High - intuitive intensity mapping | Recommended for accuracy |
| Cividis | High - scientifically derived | High - optimized for CVDs | High - uniform perceptual distance | Recommended for publication |

Table 2: Impact of Colormap Selection on Data Interpretation Accuracy

| Interpretation Parameter | Rainbow Colormaps | Perceptually Uniform Colormaps |
|---|---|---|
| Identification of maximum values | Arbitrarily drawn to yellow, not highest values | Correctly identifies highest intensity regions |
| Perception of data gradients | Inconsistent - varies by data range | Consistent across full data range |
| Accessibility for CVD researchers | Severely compromised | Fully accessible |
| Quantitative comparison between regions | Difficult due to hue variation | Intuitive due to luminance scaling |
| Reproducibility across publications | Low - subjective interpretation | High - objective interpretation |

The quantitative superiority of perceptually uniform colormaps is demonstrated through perceptual distance measurements. For the same quantitative difference in normalized glutathione abundance (approximately 1.3-1.4), the cividis colormap provides nearly identical perceptual distances (24.8 and 25.9), while the jet colormap shows widely varying perceptual distances (47.5 and 57.0) for the same actual data differences [16]. This perceptual inconsistency directly compromises the quantitative integrity of MSI data visualization.

Experimental Protocols for Optimal Data Visualization

Protocol: Validating Perceptual Uniformity in MSI Heatmaps

Principle: Ensure colormap selection accurately represents quantitative protein abundance data without perceptual distortion.

Materials:

- MSI data set (e.g., protein distribution heatmap)

- Multiple colormaps (jet, hot, greyscale, cividis)

- Kovesi test image transformation algorithm

- Perceptual distance calculation utility (e.g., cmaputil module)

Procedure:

- Generate protein distribution heatmap using default laboratory colormap

- Apply the Kovesi test by rendering a test image (a sine wave superimposed on a linear intensity ramp) with each colormap

- Identify regions where the sine wave becomes indistinguishable, indicating nonlinear (perceptually flat) color gradients

- Calculate perceptual distances between data points with known quantitative differences

- Compare perceptual distance consistency across data range

- Select colormap with most uniform perceptual gradient

- Verify accessibility using CVD simulation software (e.g., Dalton Lens)

Validation: A colormap passes validation when perceptual distance between points with equal quantitative differences varies by less than 10% across the data range [16].
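The perceptual-distance and validation steps can be sketched in plain Python. This sketch swaps in the CIELAB ΔE*ab (CIE76) color difference, a simpler metric than the one used in [16], so its distances will not reproduce the published values. The 10% criterion is interpreted here as (max − min)/min of the distances for equal data steps, which is an assumption about how "varies by less than 10%" is measured.

```python
def srgb_to_lab(rgb):
    """Convert an sRGB triple with components in [0, 1] to CIELAB (D65 white point)."""

    def linearize(c):
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta**3 else t / (3 * delta**2) + 4 / 29

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e76(rgb1, rgb2):
    """Euclidean distance between two sRGB colors in CIELAB space."""
    lab1, lab2 = srgb_to_lab(rgb1), srgb_to_lab(rgb2)
    return sum((p - q) ** 2 for p, q in zip(lab1, lab2)) ** 0.5


def passes_uniformity(distances, tolerance=0.10):
    """True if distances for equal data steps vary by less than `tolerance`."""
    return (max(distances) - min(distances)) / min(distances) < tolerance


# Perceptual distances reported in [16] for equal abundance differences:
print(passes_uniformity([24.8, 25.9]))  # cividis -> True
print(passes_uniformity([47.5, 57.0]))  # jet -> False
```

To test a colormap, equally spaced data values can be mapped to colors (for example, `matplotlib.colormaps["cividis"](x)` returns an RGBA tuple for x in [0, 1]) and the first three components passed to `delta_e76` for each pair of neighbors.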

Protocol: Hierarchical Clustering-Driven Peptide Screening for Quantitative Analysis

Principle: Streamline identification of species-specific peptide markers while excluding non-informative signals to prevent analytical fragmentation.

Materials:

- Meat samples (pork, beef)

- Extraction solution (Tris-HCl 0.05 M, urea 7 M, thiourea 2 M, pH 8.0)

- Trypsin, dithiothreitol (DTT), iodoacetamide (IAA)

- C18 solid-phase extraction columns

- UPLC system with Hypersil GOLD C18 column (2.1 mm × 150 mm, 1.9 µm)

- Q Exactive HF-X mass spectrometer

Procedure:

- Sample Preparation: Homogenize a 2 g meat sample with 20 mL of pre-cooled extraction solution in an ice-water bath

- Centrifugation: Spin at 12,000 rpm for 20 minutes at 4°C

- Digestion:

  - Aliquot 200 μL of supernatant

  - Reduce with 30 μL of 0.1 M DTT at 56°C for 60 minutes

  - Alkylate with 30 μL of 0.1 M IAA in the dark at room temperature for 30 minutes

  - Dilute with 1.8 mL Tris-HCl buffer (25 mM, pH 8.0)

  - Digest with 60 μL trypsin (1.0 mg/mL) at 37°C overnight

  - Terminate with 15 μL formic acid

- Purification:

  - Activate the C18 SPE column with methanol

  - Equilibrate with 0.5% acetic acid

  - Load sample, wash with 0.5% acetic acid

  - Elute with 2 mL ACN/0.5% acetic acid (60/40, v/v)

  - Filter through a 0.22 μm membrane

- HRMS Analysis:

  - Employ Full Scan-ddMS2 mode on the Q Exactive HF-X

  - Use gradient elution: 0.0-0.2 min (97-90% A), 0.2-16.0 min (90-60% A), 16.0-17.0 min (60-20% A), 17.0-17.5 min (20% A), 17.5-18.5 min (20-97% A), 18.5-25.0 min (97% A)

  - Mobile phase A: 0.1% FA in water; B: 0.1% FA in ACN

  - Flow rate: 0.2 mL/min; column temperature: 40°C

- Data Processing:

  - Apply hierarchical clustering analysis (HCA) to peptide signals

  - Implement positive correlation-based pre-screening

  - Verify species-specificity of candidate peptides

  - Validate quantitative suitability through recovery rate testing

Validation: The protocol achieves 80% elimination of non-informative peptide signals while maintaining accurate quantification with recoveries of 78-128% and RSD <12% [17].
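The clustering and correlation pre-screening steps can be sketched as follows. This is a minimal illustration, not the cited protocol's implementation. It assumes peptide intensity profiles measured across calibration blends of known meat content. It clusters those profiles by average linkage on a 1 - Pearson r distance and keeps clusters whose members correlate positively with content. The 0.2 distance cut-off, the r ≥ 0.9 threshold, the peptide names, and the data are all hypothetical. Species-specificity checks and recovery testing are not covered.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation of two equal-length intensity profiles (must not be constant)."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_linkage_clusters(profiles, max_distance):
    """Agglomerative clustering (average linkage) with 1 - Pearson r as the distance.

    Merging stops once the closest pair of clusters is farther apart than max_distance.
    """
    clusters = [[name] for name in profiles]

    def distance(a, b):
        return statistics.mean(1.0 - pearson(profiles[p], profiles[q]) for p in a for q in b)

    while len(clusters) > 1:
        d, i, j = min(
            (distance(clusters[i], clusters[j]), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        if d > max_distance:
            break
        clusters[i] = clusters[i] + clusters[j]  # merge cluster j into cluster i
        del clusters[j]
    return clusters


def screen_peptides(profiles, content, max_distance=0.2, min_r=0.9):
    """Keep peptides in clusters whose members correlate positively with known content."""
    kept = []
    for cluster in average_linkage_clusters(profiles, max_distance):
        mean_r = statistics.mean(pearson(profiles[p], content) for p in cluster)
        if mean_r >= min_r:
            kept.extend(cluster)
    return sorted(kept)


content = [0, 25, 50, 75, 100]  # hypothetical % target species in calibration blends
profiles = {
    "PEP_A": [1, 26, 49, 76, 101],    # tracks content
    "PEP_B": [2, 50, 99, 151, 200],   # tracks content
    "PEP_C": [100, 80, 55, 30, 5],    # anti-correlated
    "PEP_D": [10, 40, 12, 38, 11],    # non-informative
}
print(screen_peptides(profiles, content))
```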

Pathway Diagram: Data Degradation in Mass Spectrometry

Data Degradation Pathway in MS: This pathway illustrates how multiple degradation sources compromise data integrity throughout the mass spectrometry workflow, leading to erroneous scientific conclusions.

Pathway Diagram: Solution Framework for Data Integrity

Solution Framework for Data Integrity: This framework identifies key mitigation strategies that collectively preserve data integrity throughout mass spectrometry-based protein verification workflows.

Research Reagent Solutions for Protein Integrity Studies

Table 3: Essential Research Reagents for Protein Degradation and Quantification Studies

| Reagent/Category | Specific Examples | Function in Research | Application Context |
|---|---|---|---|
| Digestion Enzymes | Trypsin | Protein cleavage at specific sites for MS analysis | Sample preparation for bottom-up proteomics |
| Reducing Agents | Dithiothreitol (DTT) | Reduction of disulfide bonds | Protein denaturation before digestion |
| Alkylating Agents | Iodoacetamide (IAA) | Cysteine alkylation to prevent reformation | Sample preparation stabilization |
| Solid-Phase Extraction | C18 columns | Peptide purification and concentration | Sample clean-up before LC-MS/MS |
| Chromatography Columns | Hypersil GOLD C18 (2.1 mm × 150 mm, 1.9 µm) | Peptide separation | UPLC separation prior to MS detection |
| Mobile Phases | 0.1% Formic acid in water/acetonitrile | LC solvent system | Liquid chromatography gradient elution |
| Isobaric Labeling | TMT (Tandem Mass Tag) reagents | Multiplexed quantitative proteomics | Simultaneous quantification of multiple samples |
| Fluorescent Reporters | eGFP, GS-eGFP | Protein degradation tracking | Live-cell degradation kinetics measurement |
| Microinjection Markers | Fluorescently labeled dextran (10 kDa) | Injection volume quantification | Normalization in single-cell degradation assays |

The research reagents listed in Table 3 form the foundation of robust protein integrity verification protocols. TMT labeling enables sensitive, multiplexed analysis of several samples in a single run [18]. Fluorescent proteins such as GS-eGFP serve as critical tools for quantifying degradation kinetics at single-cell resolution [19]. Coupling hierarchical clustering with high-resolution mass spectrometry, as in the peptide screening protocol above, streamlines marker selection by removing most non-quantitative peptides without sacrificing quantitative accuracy [17].

The integrity of mass spectrometry data in protein verification research is threatened by multiple degradation pathways that require systematic mitigation. Colormaps that are not perceptually uniform introduce quantitative distortions that misrepresent protein distribution data. Fragmented, multi-step analytical workflows create bottlenecks that compromise efficiency and reproducibility. By implementing perceptually uniform visualization schemes, accessibility-focused design principles, and streamlined analytical workflows, researchers can substantially improve the reliability of protein integrity verification. These practices establish a foundation for robust, reproducible mass spectrometry methods that preserve data integrity throughout the drug development pipeline. As a result, critical decisions about therapeutic protein characterization rest on uncompromised analytical results.

In mass spectrometry-based protein integrity verification, two metrics stand as critical indicators of data quality and reliability: the Coefficient of Variation (CV) and Protein Sequence Coverage. For researchers and drug development professionals, these metrics provide the foundational evidence required to confirm protein therapeutic identity, purity, and stability. CV quantifies the precision of quantitative measurements across replicates, offering confidence in reproducibility for pharmacokinetic and biomarker studies. Sequence coverage assesses protein identity and integrity as the percentage of the protein's amino acid sequence covered by detected peptides. It thereby confirms the correct sequence of recombinant proteins and reveals potential modifications, degradation, or mutations. Within biopharmaceutical development, these parameters are indispensable for lot-release testing, biosimilar characterization, and stability studies, providing objective criteria for decision-making throughout the drug development pipeline.

Understanding and Calculating the Coefficient of Variation (CV)

Conceptual Foundation of CV

The coefficient of variation serves as a normalized measure of dispersion, enabling comparison of variability across datasets with different units or widely different means. In proteomics, CV is the ratio of the standard deviation to the mean of a protein's measured abundance across replicates, expressed as a percentage. A lower CV indicates higher reproducibility and precision, which is paramount for reliable quantification in regulated bioanalysis [20]. This metric has gained renewed importance with technological advancements in high-throughput proteomics, where it frequently serves to benchmark the quantitative performance of new instruments, sample preparation workflows, and software tools [21].

Calculation Methods and Statistical Considerations

The standard formula for calculating CV is:

CV = (σ / μ) × 100%

where σ represents the standard deviation and μ denotes the mean of the measurements [20]. However, proteomics data presents specific statistical challenges due to its non-normal distribution. Raw intensity data is right-skewed, while log-transformed data approximates a normal distribution. This characteristic necessitates careful formula selection [21].

Table 1: CV Calculation Formulas for Proteomics Data

| Data Type | Appropriate Formula | Key Characteristics |
|---|---|---|
| Non-log-transformed Intensity | Base formula: CV = (σ / μ) × 100% | Applied directly to raw intensity values; preserves original data dispersion. |
| Log-transformed Intensity | Geometric formula: CV = √(e^(σ²_log) - 1) × 100% | σ_log is the standard deviation of the natural-log-transformed data (for log2 data, multiply the standard deviation by ln 2 first); gives results comparable to the base formula on raw data. |

A critical error to avoid is applying the base CV formula to log-transformed data, which artificially compresses dispersion and can yield median CV values more than 14 times lower than the true variability, severely misrepresenting data quality [21].
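The difference between the two formulas, and the error described above, can be reproduced on a single protein. The sketch below uses hypothetical replicate intensities. It assumes log2 transformation, which is common in proteomics. Because the geometric formula expects the standard deviation on the natural-log scale, the log2 standard deviation is multiplied by ln 2 first. The function names are illustrative.

```python
import math
import statistics


def cv_base(values):
    """Base CV (%) on linear-scale intensities: sample SD / mean x 100."""
    return statistics.stdev(values) / statistics.mean(values) * 100.0


def cv_geometric_from_log2(log2_values):
    """Geometric CV (%) from log2-transformed intensities.

    sqrt(exp(sigma^2) - 1) expects sigma on the natural-log scale, so the log2 SD
    is converted with sigma_ln = ln(2) * sigma_log2.
    """
    sigma_ln = math.log(2) * statistics.stdev(log2_values)
    return math.sqrt(math.exp(sigma_ln ** 2) - 1.0) * 100.0


# Hypothetical replicate intensities for one protein (arbitrary units).
raw = [1.00e6, 1.25e6, 0.85e6, 1.10e6, 0.95e6]
log2_vals = [math.log2(x) for x in raw]

print(f"base CV on raw data:        {cv_base(raw):.1f}%")
print(f"geometric CV on log2 data:  {cv_geometric_from_log2(log2_vals):.1f}%")
# The error described above: the base formula applied to log2 values.
print(f"base CV on log2 data (bad): {cv_base(log2_vals):.2f}%")
```

Here the base formula on raw data and the geometric formula on log2 data agree closely. Applying the base formula to the log2 values returns a CV more than ten times smaller. The log2 mean (about 20) is large relative to the log-scale spread, so dividing by it shrinks the apparent dispersion.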

Experimental Factors Influencing CV

Multiple experimental factors significantly impact calculated CV values, making transparency in methods reporting essential:

- Data Normalization: Systematic normalization procedures dramatically reduce CVs by removing technical bias. For example, median normalization can produce a "considerably lower" median CV compared to non-normalized data [21].

- Software Tools and Parameters: Default settings in common data processing tools like DIA-NN and Spectronaut often apply transformations that minimize data dispersion. For instance, selecting "High Precision" versus "High Accuracy" modes in DIA-NN can result in a 45% difference in median CV. Similarly, Spectronaut's default Top 3 filter and global normalization reduce CV by approximately 35% compared to disabled settings [21].

- Acquisition Methodology: Data-Independent Acquisition (DIA) generally provides lower CVs and higher reproducibility than Data-Dependent Acquisition (DDA) due to more consistent peptide sampling, making it particularly suitable for large-scale biomarker studies [22].

Achieving and Interpreting Protein Sequence Coverage

The Role of Sequence Coverage in Protein Verification

Protein sequence coverage represents the percentage of the total protein amino acid sequence detected and confirmed by identified peptides in a mass spectrometry experiment. It provides direct evidence of protein identity, completeness, and authenticity. In biopharmaceutical contexts, sequence coverage analysis is indispensable for confirming the intact expression of recombinant proteins, identifying sequence breakages or mutations, and providing critical data for biomarker discovery, disease diagnosis, and drug development [23]. High sequence coverage builds confidence that the target protein has been correctly synthesized and processed without unexpected alterations.

Methodologies for Maximizing Sequence Coverage

Achieving comprehensive sequence coverage, particularly 100% coverage, requires strategic method design beyond standard tryptic digestion:

- Multi-Enzyme Digestion Strategies: Employing various proteases with different cleavage specificities generates complementary peptide arrays that cover regions inaccessible to a single enzyme. Commonly used enzymes include Trypsin, Chymotrypsin, Asp-N, Pepsin, and Glu-C [23]. Combined digestions (e.g., Trypsin+Asp-N, Trypsin+Glu-C) further enhance coverage [23].

- Advanced Mass Spectrometry Platforms: High-resolution mass spectrometers, such as the Q Exactive Hybrid Quadrupole-Orbitrap and Orbitrap Fusion Lumos Tribrid systems, provide the precise mass measurements and fragmentation data required for confident peptide identification [23].

- Specialized Approaches for Challenging Proteins: Small membrane proteins and hydrophobic segments often require alternative approaches. Top-down MALDI-MS/MS techniques and solvent extraction-based purifications can sequence proteins inaccessible to conventional bottom-up methods [24]. Alternative proteases like chymotrypsin, elastase, or pepsin can generate peptides from transmembrane segments lacking basic residues [24].
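An in-silico digest shows why complementary proteases help. The sketch below is illustrative only. Its cleavage rules are simplified: it allows no missed cleavages and no exceptions beyond trypsin's proline rule. The 7-30 residue window is an assumed stand-in for "detectable" peptides, and the example sequence is arbitrary.

```python
import re

# Simplified cleavage rules (assumptions; real proteases have further exceptions,
# e.g. Glu-C can also cleave after D depending on buffer).
CLEAVAGE_SITES = {
    "trypsin": r"(?<=[KR])(?!P)",  # C-terminal to K or R, not before P
    "glu-c": r"(?<=E)",            # C-terminal to E
    "asp-n": r"(?=D)",             # N-terminal to D
}


def digest(sequence, enzyme):
    """Complete in-silico digest: (start_index, peptide) pairs, no missed cleavages."""
    cuts = [m.start() for m in re.finditer(CLEAVAGE_SITES[enzyme], sequence)]
    peptides, start = [], 0
    for cut in cuts + [len(sequence)]:
        if cut > start:
            peptides.append((start, sequence[start:cut]))
            start = cut
    return peptides


def theoretical_coverage(sequence, enzymes, min_len=7, max_len=30):
    """Percent of residues in peptides of an assumed detectable length, pooled over enzymes."""
    covered = set()
    for enzyme in enzymes:
        for start, peptide in digest(sequence, enzyme):
            if min_len <= len(peptide) <= max_len:
                covered.update(range(start, start + len(peptide)))
    return 100.0 * len(covered) / len(sequence)


# Arbitrary example sequence (illustrative only).
seq = "MKWVTFISLLLLFSSAYSRGVFRRDTHKSEIAHRFKDLGEEHFKGLVLIAFSQYLQQCPFDEHVKLVNELTEFAK"
for combo in (["trypsin"], ["glu-c"], ["asp-n"], ["trypsin", "glu-c", "asp-n"]):
    print("+".join(combo), f"{theoretical_coverage(seq, combo):.0f}%")
```

Pooling the digests can only add covered residues, so the combined figure is never lower than any single enzyme's. Regions that stay uncovered point to where an additional protease or a different length window might be needed.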

Troubleshooting Incomplete Coverage

When sequence coverage falls below 100%, systematic investigation is necessary. Researchers should review protein data and sequences to identify undetected theoretical peptides, then determine whether alternative enzymatic treatments or methodological adjustments could recover missing regions [23]. For proteins with small molecular weights, high-concentration SDS-PAGE separation followed by in-gel digestion can improve detection [23]. In quantification experiments using surrogate peptides, selecting multiple signature peptides for each target protein enables cross-validation and improves quantification accuracy, as demonstrated in the quantification of Cry1Ab protein in genetically modified plants [25].

Integrated Experimental Protocols

Protocol for Determining Protein Sequence Coverage

This protocol outlines the steps for achieving comprehensive protein sequence coverage using multi-enzyme digestion.

Materials:

- Purified target protein (50-100 μg)

- Specific proteases (Trypsin, Chymotrypsin, Asp-N, Glu-C, etc.)

- High-resolution mass spectrometer (e.g., Q Exactive HF-X or Orbitrap Fusion Lumos)

- Liquid chromatography system (e.g., Easy-nLC 1200)

- Professional analysis software (e.g., MaxQuant, DIA-NN, Spectronaut)

Procedure:

- Sample Preparation: Divide the target protein sample into aliquots for separate enzymatic digestions.

- Multi-Enzyme Digestion: Digest each aliquot with different specific proteases (e.g., Trypsin, Chymotrypsin, Asp-N) under optimal conditions for each enzyme.

- LC-MS/MS Analysis: Analyze digested peptides using high-resolution LC-MS/MS with data-dependent or data-independent acquisition.

- Database Search: Identify peptides against the target protein sequence using professional analysis software with appropriate false discovery rate controls (typically ≤1%).

- Sequence Assembly: Combine identification results from multiple enzymatic digestions to reconstruct complete protein sequence information.

- Coverage Calculation: Calculate sequence coverage as (number of amino acids in detected peptides / total number of amino acids in protein) × 100%.
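The sequence assembly and coverage calculation steps can be expressed as a short function. This sketch assumes identified peptides are available as plain sequences, pooled across all digests for one protein. It counts every exact match in the protein sequence. It also reports the uncovered stretches, which are the regions to target when troubleshooting. The toy protein and peptide lists are hypothetical.

```python
def sequence_coverage(protein, detected_peptides):
    """Return (% coverage, uncovered 1-based inclusive residue ranges).

    Peptides from all digestions are pooled; every exact occurrence of a peptide
    in the protein sequence is marked as covered.
    """
    covered = [False] * len(protein)
    for peptide in set(detected_peptides):
        if not peptide:
            continue
        start = protein.find(peptide)
        while start != -1:
            for i in range(start, start + len(peptide)):
                covered[i] = True
            start = protein.find(peptide, start + 1)

    gaps, i = [], 0
    while i < len(protein):
        if covered[i]:
            i += 1
            continue
        j = i
        while j < len(protein) and not covered[j]:
            j += 1
        gaps.append((i + 1, j))  # residues i+1..j in 1-based numbering
        i = j
    return 100.0 * sum(covered) / len(protein), gaps


protein = "ACDEFGHIKLMNPQRSTVWY"           # toy 20-residue sequence
tryptic = ["ACDEFGHIK", "LMNPQR"]         # hypothetical trypsin identifications
glu_c = ["FGHIKLMNPQRSTVWY"]              # hypothetical Glu-C identification
print(sequence_coverage(protein, tryptic))
print(sequence_coverage(protein, tryptic + glu_c))
```

Exact string matching ignores the isobaric Leu/Ile ambiguity and modified residues. A production pipeline would typically take peptide positions from the database search results instead.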

Troubleshooting Tips:

- If coverage remains incomplete after multi-enzyme digestion, consider combining enzymes in simultaneous digestion.

- For membrane proteins, incorporate organic solvent extraction and alternative proteases.

- For low-abundance proteins, implement affinity enrichment prior to digestion.

Protocol for Precision Assessment Using CV

This protocol describes the systematic evaluation of quantitative precision in proteomic experiments using coefficient of variation.

Materials:

- Biological or technical replicates (minimum n=3, recommended n=5-6)

- Stable isotope-labeled internal standards (when applicable)

- Statistical computing environment (e.g., R with proteomicsCV package)

Procedure:

- Experimental Design: Prepare and process sufficient replicates to support robust variability assessment (minimum 3 for validation, 5-6 for discovery studies).

- Data Acquisition: Analyze all replicates using identical LC-MS/MS conditions within a randomized sequence to minimize batch effects.

- Data Preprocessing: Apply consistent normalization procedures while retaining non-log-transformed intensity data for CV calculation.

- CV Calculation:

- For non-log-transformed intensity data: Use base formula CV = (σ / μ) × 100%

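- For log-transformed intensity data: Use geometric formula CV = √(e^(σ²_log) - 1) × 100%, where σ_log is the standard deviation on the natural-log scale (for log2 data, multiply the standard deviation by ln 2 first)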

- Precision Assessment: Calculate CVs for each protein across replicates, then determine median CV across all quantified proteins as an overall quality metric.

- Data Reporting: Document all normalization procedures, transformation steps, and the specific CV formula used in methods sections.
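For the precision-assessment step, per-protein CVs and their median can be computed as below. This is a sketch, not the proteomicsCV package. It applies the base formula to non-log intensities. The rule of skipping proteins with fewer than three observed replicates is an assumption and should be reported alongside the result. The protein IDs and intensities are hypothetical.

```python
import statistics


def median_cv(protein_intensities, min_replicates=3):
    """Median of per-protein CVs (%) computed with the base formula on non-log intensities.

    protein_intensities maps a protein ID to its replicate intensities, with None for
    missing values. Proteins with fewer than min_replicates observed values are skipped.
    """
    per_protein = {}
    for protein, values in protein_intensities.items():
        observed = [v for v in values if v is not None]
        if len(observed) >= min_replicates:
            per_protein[protein] = statistics.stdev(observed) / statistics.mean(observed) * 100.0
    if not per_protein:
        raise ValueError("no protein has enough observed replicates")
    return statistics.median(per_protein.values()), per_protein


# Hypothetical triplicate intensities (arbitrary units).
data = {
    "P1": [100.0, 100.0, 100.0],
    "P2": [90.0, 100.0, 110.0],
    "P3": [None, None, 50.0],   # too few observations, excluded
}
overall, by_protein = median_cv(data)
print(f"median CV = {overall:.1f}% over {len(by_protein)} proteins")
```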

Troubleshooting Tips:

- If CVs are higher than expected, examine raw data for outliers and check normalization procedures.

- For experiments comparing multiple conditions, ensure consistent CV calculation methodology across all groups.

- Use the R package "proteomicsCV" for standardized calculations [21].

Applications in Protein Therapeutic Development

Regulatory Considerations and Validation Requirements

For protein therapeutics development, regulatory guidelines establish specific acceptance criteria for quantitative bioanalytical methods. The AAPS Bioanalytical Focus Group recommends validation parameters that blend considerations from both small molecule and protein ligand-binding assays [26].

Table 2: Validation Acceptance Criteria for Protein LC-MS/MS Bioanalytical Methods

| Validation Parameter | Small Molecule LC-MS/MS | Protein LBA | Protein LC-MS/MS (Recommended) |
|---|---|---|---|
| Lower Limit of Quantification | Within ±20% | Within ±25% | Within ±25% |
| Calibration Standards | Within ±15% (except LLOQ) | Within ±20% (except LLOQ/ULOQ) | Within ±20% (except LLOQ) |
| Accuracy and Precision | Within ±15% (LLOQ ±20%); minimum 3 runs | Within ±20% (LLOQ/ULOQ ±25%); minimum 6 runs | Within ±20% (LLOQ ±25%); minimum 3 runs |
| Selectivity/Specificity | 6 matrix lots; blanks <20% of LLOQ | 10 matrix lots; LLOQ accuracy within ±25% for 80% of lots | 6-10 matrix lots; blanks <20% of LLOQ; LLOQ accuracy within ±25% for 80% of lots |
| Matrix Effect | IS-normalized CV ≤15% across 6 lots | Not applicable | IS-normalized CV ≤20% across 6-10 lots |

These validation parameters provide a framework for establishing assays that support non-clinical toxicokinetic and clinical pharmacokinetic studies, with the understanding that method requirements should be tailored to the specific protein therapeutic, intended study population, and analytical challenges [26].
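This sketch illustrates how the recommended protein LC-MS/MS criteria in Table 2 might be applied to one quality-control level. It computes accuracy as percent bias from nominal and precision as percent CV. It then compares both with the ±20% limit (±25% at the LLOQ). Two choices are assumptions made for illustration: applying the same limit to %CV, and the example concentrations (arbitrary units).

```python
import statistics


def evaluate_qc_level(nominal, measured, is_lloq=False):
    """Accuracy (% bias) and precision (% CV) for one QC level against Table 2 limits.

    Uses the recommended protein LC-MS/MS limits: within +/-20%, or +/-25% at the LLOQ.
    Using the same limit as a maximum %CV is an assumption in this sketch.
    """
    limit = 25.0 if is_lloq else 20.0
    mean = statistics.mean(measured)
    bias_pct = (mean - nominal) / nominal * 100.0
    cv_pct = statistics.stdev(measured) / mean * 100.0
    return {
        "bias_pct": round(bias_pct, 1),
        "cv_pct": round(cv_pct, 1),
        "passes": abs(bias_pct) <= limit and cv_pct <= limit,
    }


# Hypothetical replicate results at two QC levels.
print(evaluate_qc_level(1.0, [1.2, 1.1, 1.15], is_lloq=True))
print(evaluate_qc_level(50.0, [61.0, 63.0, 62.0]))
```

The first level passes under the wider LLOQ limit. The second level is precise but biased by about 24%, so it fails the ±20% accuracy criterion.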

Case Studies in Biopharmaceutical Analysis

Biosimilarity Assessment: Comprehensive sequence coverage analysis provides critical evidence for biosimilar development by verifying identical primary structure to the reference product. Multi-enzyme digestion approaches achieving 100% sequence coverage can confirm amino acid sequence identity, while high-precision quantification (CV < 15%) ensures consistent expression levels across manufacturing batches [23].

Antibody-Drug Conjugate Characterization: For ADCs, sequence coverage verifies the integrity of the antibody scaffold, while CV measurements ensure precise quantification of drug-to-antibody ratio (DAR) and payload distribution. Specialized digestion protocols may be required to characterize conjugation sites and confirm the absence of sequence variants that could impact binding or efficacy.

Biomarker Verification: In clinical proteomics, CV values help identify reliable biomarkers from discovery datasets. Proteins with low CVs across technical and biological replicates demonstrate consistent quantification, increasing confidence in their validity. Sequence coverage provides additional confirmation of biomarker identity, which is particularly important for distinguishing between protein isoforms with high sequence homology [27].

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Essential Research Reagents and Materials for Protein Quantification Studies

| Category | Specific Examples | Function and Application |
|---|---|---|
| Mass Spectrometry Systems | Q Exactive HF-X, Orbitrap Fusion Lumos, timsTOF | High-resolution accurate mass measurement for peptide identification and quantification [23] [22]. |
| Liquid Chromatography | Easy-nLC 1200, NanoLC systems | High-pressure nanoscale separation of complex peptide mixtures [23]. |
| Proteolytic Enzymes | Trypsin, Chymotrypsin, Asp-N, Glu-C, Pepsin | Generate complementary peptide patterns for comprehensive sequence coverage [23] [24]. |
| Sample Preparation Kits | Proteograph Product Suite, Immunoaffinity Magnetic Beads | Compress dynamic range, enrich low-abundance proteins, and remove interfering substances [12] [25]. |
| Data Analysis Software | DIA-NN, Spectronaut, Skyline, MaxQuant | Process raw MS data, identify peptides, quantify proteins, and calculate quality metrics [21] [28]. |
| Internal Standards | Stable Isotope-Labeled (SIL) Peptides/Proteins | Normalize technical variation and enable absolute quantification [26] [25]. |
| Statistical Tools | R package "proteomicsCV", Perseus, MSstats | Standardize CV calculations and perform statistical analysis of quantitative data [21]. |

The integrated application of sequence coverage and coefficient of variation provides a robust framework for protein quantification and verification in mass spectrometry-based analyses. Sequence coverage delivers comprehensive information about protein identity and integrity, while CV quantifies measurement precision and reproducibility. Together, these metrics form the foundation for reliable protein characterization throughout the biopharmaceutical development pipeline—from initial discovery through clinical trials and quality control. As mass spectrometry technologies continue to evolve with improved sensitivity, throughput, and data analysis capabilities, the strategic implementation of these essential metrics will remain fundamental to advancing protein therapeutics and biomarker research with the rigor required for regulatory approval and clinical implementation.

The Critical Role of Integrity Verification in Drug Development and Biomarker Discovery

Integrity verification forms the foundational pillar of modern drug development and biomarker discovery, ensuring that data generated throughout the research lifecycle is reliable, reproducible, and regulatory-compliant. In the context of mass spectrometry-based protein analysis, integrity encompasses multiple dimensions—from sample integrity during collection and processing to data integrity throughout acquisition and interpretation. The evolution of proactive health management paradigms has shifted focus from traditional disease diagnosis to prediction and prevention, placing increased emphasis on biomarker-driven models that require rigorous verification protocols [29]. This transition, coupled with advancements in high-throughput proteomics and multi-omics integration, demands robust frameworks that maintain analytical veracity from benchtop to clinical application.

The critical importance of integrity verification is magnified in regulated pharmaceutical environments where data integrity breaches can compromise patient safety and therapeutic efficacy. Regulatory agencies including the FDA and EMA have intensified scrutiny of data management practices, with nearly 80% of data integrity-related warning letters occurring in a recent five-year period [30]. The convergence of mass spectrometry technologies with structured integrity frameworks creates a synergistic relationship that accelerates biomarker discovery while maintaining the stringent evidentiary standards required for regulatory approval and clinical implementation.

Biomarker Verification Applications in Disease Research

Protein biomarker verification serves critical functions across the drug development continuum, from early target identification to clinical trial enrichment and therapeutic monitoring. The integration of mass spectrometry-based proteomic analysis has revealed substantial biomarker panels across diverse disease states, providing insights into pathological mechanisms and potential intervention points.

Comprehensive Proteomic Signatures in Neuromuscular Disease

Recent large-scale proteomic studies have demonstrated the power of systematic biomarker verification in elucidating disease pathophysiology. A 2025 investigation of Duchenne muscular dystrophy (DMD) quantified 7,289 serum proteins using SomaScan proteomics in corticosteroid-naïve patients, identifying 1,881 significantly elevated and 1,086 significantly decreased proteins compared to healthy controls [31]. This extensive profiling substantially expanded the catalog of circulating biomarkers relevant to muscle pathology, with independent cohort validation showing remarkable consistency (Spearman r = 0.85) [31].

Table 1: Key Protein Biomarker Categories Identified in DMD Research

| Biomarker Category | Representative Proteins | Fold Change in DMD | Biological Significance |
|---|---|---|---|
| Muscle Injury Biomarkers | Alpha-actinin-2 (ACTN2), Myosin binding protein C (MYBPC1), Creatine kinase-M type (CKM) | 151×, 86×, 54× | Sarcomere disruption, muscle fiber leakage, disease activity monitoring |
| Mitochondrial Enzymes | Succinyl-CoA:3-ketoacid-coenzyme A transferase 1 (SCOT), Enoyl-CoA delta isomerase 1 | 21×, 8.7× | Metabolic dysregulation, bioenergetic impairment |
| Extracellular Matrix Proteins | 45 elevated, 92 decreased proteins | Variable | Fibrosis, tissue remodeling, disease progression |
| Novel Muscle Factors | Kelch-like protein 41 (KLHL41), Ankyrin repeat domain-containing protein 2 (ANKRD2) | 19×, 22× | Regeneration pathways, emerging therapeutic targets |

The biological validation of these findings through correlation with muscle mRNA expression datasets further strengthened the evidence for their pathological relevance, creating a robust foundation for clinical translation [31]. This systematic approach to biomarker verification—spanning discovery, analytical validation, and biological confirmation—exemplifies the rigorous methodology required for meaningful biomarker implementation in drug development pipelines.

Cancer Metabolomics and Spatial Resolution

In oncology, MALDI mass spectrometry imaging (MALDI-MSI) has emerged as a powerful platform for spatial metabolite detection, enabling visualization of metabolic heterogeneity within tumor microenvironments. This technology has identified stage-specific metabolic fingerprints across breast, prostate, colorectal, lung, and liver cancers, providing functional insights into tumor biology [32]. The ability to map thousands of metabolites at near single-cell resolution has revealed metabolic alterations linked to hypoxia, nutrient deprivation, and therapeutic resistance [32].

Technological advancements including advanced matrices, on-tissue derivatization, and MALDI-2 post-ionization have significantly improved sensitivity, metabolite coverage, and spatial fidelity, pushing the boundaries of cancer metabolite detection [32]. The integration of MALDI-Orbitrap and Fourier-transform ion cyclotron resonance (FT-ICR) platforms has further enhanced mass accuracy and resolution, enabling more confident biomarker identification [32]. These capabilities position mass spectrometry as an indispensable tool for verifying metabolic integrity in cancer research and therapeutic development.

Mass Spectrometry Methodologies for Integrity Verification

Mass spectrometry platforms provide versatile technological foundations for integrity verification across analyte classes, from small molecule metabolites to intact proteins. Understanding the capabilities and applications of these platforms is essential for appropriate methodological selection in drug development and biomarker verification workflows.

Metabolomics Workflows and Platform Selection

Mass spectrometry-based metabolomics comprehensively studies small molecules in biological systems, offering deep insights into metabolic profiles [33]. The integrity of metabolomic data begins with appropriate sample collection and processing, where rapid quenching of metabolism and efficient metabolite extraction are critical for preserving biological fidelity [33]. Liquid-liquid extraction methods using solvents like methanol/chloroform mixtures enable partitioning of polar and non-polar metabolites, while internal standards compensate for technical variability and enhance quantification accuracy [33].

Table 2: Mass Spectrometry Platforms for Biomarker Integrity Verification

| Platform Type | Key Applications | Strengths | Recent Technological Advances |
|---|---|---|---|
| LC-ESI-MS/MS | Quantitative proteomics, targeted metabolite analysis | Broad metabolite coverage, excellent for polar metabolites, high sensitivity | Evosep Eno LC system (500 samples/day), Thermo Orbitrap Astral Zoom (30% faster scan speeds) [4] |
| MALDI-MSI | Spatial metabolomics, tissue imaging, cancer metabolomics | Preservation of spatial information, rapid analysis, minimal sample preparation | MALDI-2 post-ionization, integration with Orbitrap/FT-ICR, machine learning data analysis [32] |
| Top-Down Proteomics | Intact protein analysis, proteoform characterization, PTM mapping | Comprehensive protein characterization, avoids inference limitations | Bruker timsTOF with ion enrichment mode, Thermo Orbitrap Excedion Pro with alternative fragmentation [4] |
| High-Resolution Benchtop Systems | Routine analysis, quality control, clinical applications | Space efficiency, operational simplicity, reduced resource consumption | Waters Xevo Absolute XR (6× reproducibility), Agilent Infinity Lab ProIQ [4] |

The choice between MALDI and ESI platforms depends on analytical requirements. ESI-LC-MS offers broad metabolite coverage and is ideal for polar metabolites. MALDI provides superior spatial resolution and rapid analysis without chromatographic separation [32]. Because MALDI produces predominantly singly charged ions, it simplifies spectral interpretation, reducing complexity and enhancing detection clarity for metabolites [32]. For protein analysis, the field is shifting from bottom-up to top-down proteomic approaches that preserve intact protein information. These approaches enable comprehensive characterization of proteoforms and post-translational modifications that are critical for functional biology [4].

Experimental Protocol: LC-MS/MS-Based Protein Biomarker Verification

The following protocol outlines a standardized approach for protein biomarker verification using liquid chromatography-tandem mass spectrometry:

Sample Preparation and Quality Control

- Collect biological samples (serum, plasma, tissue) using standardized protocols to minimize pre-analytical variability [27]. For serum samples, allow clotting for 30 minutes at room temperature before centrifugation at 2,000 × g for 10 minutes.

- Aliquot and flash-freeze samples in liquid nitrogen within 60 minutes of collection. Store at -80°C until analysis [33].

- Perform protein precipitation and digestion using filter-aided sample preparation (FASP) or in-solution digestion protocols. Add stable isotope-labeled standard peptides for quantification [27].

- Validate sample quality using reference standards and quality control pools. Exclude samples with significant degradation or outlier behavior in quality metrics [27].

Liquid Chromatography Separation

- Utilize nanoflow or microflow LC systems with trap-column configurations for online desalting and separation.

- Employ reversed-phase C18 columns (75 μm × 25 cm, 1.6-2.0 μm particle size) with gradient elution (90-240 minutes) using water/acetonitrile/0.1% formic acid mobile phases [27].

- Maintain column temperature at 40-55°C to enhance separation efficiency and reproducibility.

Mass Spectrometry Data Acquisition

- Operate mass spectrometer in data-dependent acquisition (DDA) or data-independent acquisition (DIA) mode. For targeted verification, use parallel reaction monitoring (PRM) for enhanced sensitivity and reproducibility [27].

- Set mass resolution to ≥35,000 (at m/z 200) for MS1 and 17,500 for MS2 scans. Use normalized collision energy of 25-35% for HCD fragmentation [27].

- Implement real-time calibration and mass correction using reference compounds.

Data Processing and Integrity Assessment

- Process raw data using specialized software (MaxQuant, Skyline, Progenesis QI) for peak detection, alignment, and quantification [27].

- Apply stringent false discovery rate (FDR) thresholds (<1%) for protein identification using target-decoy approaches [27].

- Verify data quality through metrics including retention time stability (<0.5% CV), mass accuracy (<5 ppm error), and intensity correlation (r > 0.9) across technical replicates [27].
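The three quality metrics above reduce to simple arithmetic. The sketch below assumes three inputs: one peptide's retention times across replicate runs, its measured m/z values across those runs, and matched intensities from two replicate runs. Computing the correlation on log2 intensities is an assumption; it keeps a few very abundant species from dominating r. The thresholds follow the text. All values and function names are hypothetical.

```python
import math
import statistics


def ppm_error(observed_mz, theoretical_mz):
    """Mass error in parts per million."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6


def cv_percent(values):
    return statistics.stdev(values) / statistics.mean(values) * 100.0


def pearson_r(x, y):
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def replicate_qc(rt_minutes, observed_mz, theoretical_mz, intensities_a, intensities_b):
    """Check RT stability (<0.5% CV), mass accuracy (<5 ppm), and replicate r (>0.9)."""
    rt_cv = cv_percent(rt_minutes)
    worst_ppm = max(abs(ppm_error(mz, theoretical_mz)) for mz in observed_mz)
    r = pearson_r([math.log2(v) for v in intensities_a], [math.log2(v) for v in intensities_b])
    return {
        "rt_cv_pct": rt_cv,
        "max_abs_ppm": worst_ppm,
        "r": r,
        "passes": rt_cv < 0.5 and worst_ppm < 5.0 and r > 0.9,
    }


result = replicate_qc(
    rt_minutes=[42.10, 42.15, 42.08],
    observed_mz=[785.8440, 785.8419, 785.8431],
    theoretical_mz=785.8426,
    intensities_a=[1e5, 5e5, 2e6, 8e6],
    intensities_b=[1.1e5, 4.6e5, 2.2e6, 7.5e6],
)
print(result)
```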

Data Integrity Frameworks and Regulatory Compliance

Robust data integrity frameworks are essential components of integrity verification in regulated drug development environments. The ALCOA++ principles provide a comprehensive framework for ensuring data integrity throughout the biomarker discovery and validation lifecycle [30].

ALCOA++ Implementation in Analytical Workflows

The expanded ALCOA++ principles encompass ten attributes that collectively ensure data integrity from generation through archival:

- Attributable: Link all data to the person or system that created or modified it, using unique user IDs and appropriate access controls [30].

- Legible: Ensure data remains readable and reviewable in its original context, with reversible encoding where applicable [30].

- Contemporaneous: Record data at the time of activity with automatically captured date/time stamps synchronized to external standards [30].

- Original: Preserve the first capture or certified copy created under controlled procedures, maintaining dynamic source data where relevant [30].

- Accurate: Faithfully represent what occurred through validated coding, transfers, and interfaces with calibrated devices [30].

- Complete: Retain all data, metadata, audit trails, and contextual information needed to reconstruct events [30].

- Consistent: Maintain standardized definitions, units, and sequencing across the data lifecycle with aligned time stamps [30].

- Enduring: Preserve data intact and usable for the entire retention period with suitable formats and backups [30].

- Available: Ensure data retrievability for monitoring, audits, and inspections throughout the retention period [30].

- Traceable: Enable end-to-end tracking of data and metadata changes through comprehensive audit trails [30].
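Several of these attributes (Attributable, Contemporaneous, Original, Traceable) map directly onto the fields of an audit-trail entry. The sketch below is a hypothetical illustration, not a validated 21 CFR Part 11 system. Each change records who made it, an automatic UTC timestamp, the old and new values, and a reason. Each entry also stores a SHA-256 hash of its content, chained to the previous entry, so that later edits can be detected. Hash chaining is a design choice assumed here rather than a requirement of ALCOA++.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional

GENESIS_HASH = "0" * 64


@dataclass(frozen=True)
class AuditEntry:
    user_id: str               # Attributable: who made the change
    timestamp_utc: str         # Contemporaneous: captured automatically, in UTC
    record_id: str
    field_name: str
    old_value: Optional[str]   # Original value kept alongside the new one
    new_value: Optional[str]
    reason: str
    previous_hash: str         # Traceable: links this entry to the one before it
    entry_hash: str


def _digest(*fields):
    return hashlib.sha256(json.dumps(fields).encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only, hash-chained change log (illustrative design only)."""

    def __init__(self):
        self._entries: List[AuditEntry] = []

    def record(self, user_id, record_id, field_name, old_value, new_value, reason):
        previous = self._entries[-1].entry_hash if self._entries else GENESIS_HASH
        stamp = datetime.now(timezone.utc).isoformat()
        body = (user_id, stamp, record_id, field_name, old_value, new_value, reason, previous)
        entry = AuditEntry(*body, entry_hash=_digest(*body))
        self._entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; an edited entry, or one removed mid-chain, breaks it."""
        previous = GENESIS_HASH
        for e in self._entries:
            body = (e.user_id, e.timestamp_utc, e.record_id, e.field_name,
                    e.old_value, e.new_value, e.reason, e.previous_hash)
            if e.previous_hash != previous or _digest(*body) != e.entry_hash:
                return False
            previous = e.entry_hash
        return True


trail = AuditTrail()
first = trail.record("analyst01", "SAMPLE-0042", "peak_area", "15230", "15310", "manual reintegration")
second = trail.record("reviewer02", "SAMPLE-0042", "status", "pending", "approved", "second-person review")
print(trail.verify())
```

Hash chaining only makes tampering detectable. It does not by itself satisfy the Enduring or Available attributes, which depend on storage, backup, and access controls.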

Integrated Informatics Infrastructure

Connected laboratory informatics systems significantly enhance data integrity by automating data transfer and reducing manual intervention points. Integration between Laboratory Information Management Systems (LIMS) and Chromatography Data Systems (CDS) creates streamlined workflows that minimize transcription errors and improve traceability [34]. In a non-integrated environment, manual steps for sample information transfer, result calculation, and data entry introduce multiple opportunities for error, while integrated environments enable automated data exchange at defined decision points [34].

Modern informatics solutions provide configurable control over the timing of data transfer. Options range from immediate transfer after acquisition to transfer only after the full review and approval cycle is complete, which lets organizations align digital workflows with evolving SOP requirements [34]. This integrated approach facilitates compliance with 21 CFR Part 11 and EU GMP Annex 11. It also improves operational efficiency through fewer manual steps and streamlined training requirements [34].
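A configurable transfer point can be modeled as a gate that compares a result's review status with the chosen policy. The Python sketch below is purely illustrative. `TransferPolicy`, `ResultStatus` and `may_transfer` are hypothetical names and are not part of any vendor's LIMS or CDS interface.

```python
from enum import Enum


class TransferPolicy(Enum):
    """Hypothetical points at which CDS results may be released to the LIMS."""
    AFTER_ACQUISITION = 1   # push as soon as data are acquired
    AFTER_PROCESSING = 2    # push once results are calculated
    AFTER_APPROVAL = 3      # push only after full review and approval


class ResultStatus(Enum):
    """Lifecycle states of a result, ordered from earliest to latest."""
    ACQUIRED = 1
    PROCESSED = 2
    REVIEWED = 3
    APPROVED = 4


# Minimum result status required before transfer under each policy.
_REQUIRED_STATUS = {
    TransferPolicy.AFTER_ACQUISITION: ResultStatus.ACQUIRED,
    TransferPolicy.AFTER_PROCESSING: ResultStatus.PROCESSED,
    TransferPolicy.AFTER_APPROVAL: ResultStatus.APPROVED,
}


def may_transfer(status: ResultStatus, policy: TransferPolicy) -> bool:
    """Return True if a result at `status` may be sent to the LIMS."""
    return status.value >= _REQUIRED_STATUS[policy].value
```

Storing the policy as data rather than hard-coding it reflects the point made above: the transfer timing can change when SOPs change, without altering the rest of the workflow.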

Research Reagent Solutions for Biomarker Verification

The reliability of biomarker verification studies depends heavily on appropriate selection and quality of research reagents. Consistent quality across reagent batches ensures analytical reproducibility and minimizes technical variability in mass spectrometry-based assays.

Table 3: Essential Research Reagents for Mass Spectrometry-Based Biomarker Verification

| Reagent Category | Specific Examples | Function and Application | Quality Considerations |
|---|---|---|---|
| Sample Preparation | Methanol, chloroform, acetone, acetonitrile | Metabolite extraction, protein precipitation, lipid isolation | LC-MS grade, low background contamination, consistent purity between lots [33] |
| Digestion Enzymes | Trypsin, Lys-C, Asp-N | Protein cleavage for bottom-up proteomics, sequence-specific digestion | Sequencing-grade, MS-compatible, minimal autolysis, validated activity [27] |
| Internal Standards | Stable isotope-labeled peptides, metabolite analogs | Quantification standardization, technical variability compensation | >97% isotopic enrichment, chemical purity, stability in matrix [33] [27] |
| Ionization Matrices | CHCA, SA, DHB, DHB/HA, 9-AA | Laser energy absorption, analyte desorption/ionization in MALDI | High purity, appropriate crystal structure, low background interference [32] |
| Chromatography | C18, C8, HILIC, ion exchange columns | Analyte separation, resolution enhancement, interference removal | Column certification, stable performance, minimal carryover [27] |
| Calibration Solutions | ESI tuning mix, sodium formate clusters | Mass accuracy calibration, instrument performance verification | Certified reference materials, traceable concentrations [27] |
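The internal-standard and calibration rows of Table 3 support two routine calculations. The first is the mass error of an observed ion in parts per million (ppm). The second is single-point isotope-dilution quantification against a spiked stable isotope-labeled (SIL) peptide. The Python sketch below is a minimal illustration. It assumes that the light and heavy peptide forms respond identically in the mass spectrometer, and it ignores any unlabeled fraction in the standard. A validated assay would correct for both.

```python
def ppm_error(measured_mz: float, theoretical_mz: float) -> float:
    """Mass error in parts per million relative to the theoretical m/z."""
    return (measured_mz - theoretical_mz) / theoretical_mz * 1e6


def isotope_dilution_amount(light_area: float, heavy_area: float,
                            heavy_spike_fmol: float) -> float:
    """Estimate the endogenous (light) peptide amount from a SIL standard.

    Assumes the light/heavy peak-area ratio equals their molar ratio
    (equal response, single-point calibration, no isotopic-purity correction).
    """
    if heavy_area <= 0:
        raise ValueError("heavy_area must be positive")
    return light_area / heavy_area * heavy_spike_fmol
```

For example, an ion measured at m/z 500.0010 against a theoretical 500.0000 has a mass error of 2 ppm. A light/heavy area ratio of 2 with 50 fmol of spiked standard implies about 100 fmol of endogenous peptide.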

Integrity verification represents a critical nexus between technological innovation, analytical rigor, and regulatory compliance in drug development and biomarker discovery. The integration of advanced mass spectrometry platforms with structured data integrity frameworks creates a robust foundation for generating reliable, actionable scientific evidence. As the field progresses toward increasingly complex multi-omics integration and personalized medicine approaches, the principles of integrity verification will continue to ensure that biomarker data maintains the evidentiary standard required for confident clinical decision-making. The continued evolution of mass spectrometry technologies—particularly in top-down proteomics, spatial metabolomics, and integrated informatics—promises to enhance both the depth of biological insight and the robustness of verification methodologies, ultimately accelerating the translation of biomarker discoveries into clinical applications that improve patient outcomes.

Advanced MS Workflows in Action: From Sample Prep to Specific Applications

In mass spectrometry-based protein integrity verification research, the accuracy and reliability of results depend fundamentally on the quality of sample preparation. This initial phase of the proteomics workflow ensures that proteins are efficiently extracted, digested and cleaned up for subsequent LC-MS/MS analysis. Sample preparation directly affects protein identification, quantification accuracy and the detection of post-translational modifications, all of which are essential for biopharmaceutical characterization and quality control [10] [2]. Biological samples are complex, and protein concentrations span a wide dynamic range. These challenges must be addressed through optimized, reproducible preparation methods [10]. Regulatory agencies increasingly support mass spectrometry as a reliable quality-control tool in drug manufacturing, so standardized sample preparation protocols have become more important than ever for ensuring consistent, high-quality results in protein integrity studies [2].

Critical Evaluation of Sample Preparation Methods

Method Selection Criteria

Choosing an appropriate sample preparation method requires careful consideration of multiple factors, including sample type, protein quantity, and specific research objectives. For mass spectrometry-based protein integrity verification, key selection criteria include compatibility with downstream MS analysis, reproducibility, recovery efficiency for low-abundance proteins, and practicality for the laboratory setting. The method must effectively remove interferents such as detergents and salts while maintaining protein representativity and enabling efficient digestion [10] [35]. Sample complexity and the need for specialized analyses such as phosphoproteomics or membrane protein characterization further influence method selection, as different protocols exhibit distinct strengths and limitations for specific applications [36] [37].

Comparative Analysis of Primary Methods

Table 1: Comparative Analysis of Sample Preparation Methods for Mass Spectrometry

| Method | Key Principle | Advantages | Limitations | Optimal Use Cases |
|---|---|---|---|---|
| Filter-Aided Sample Preparation (FASP) | Ultrafiltration to remove contaminants & on-membrane digestion [35] [37] | Effective SDS removal; compatibility with complex samples; high protein identification rates [35] [37] | Time-consuming; potential peptide loss; higher cost; not ideal for low sample amounts [37] | Barley leaves; Arabidopsis thaliana leaves; samples requiring thorough detergent removal [35] [37] |
| Single-Pot Solid-Phase-Enhanced Sample Preparation (SP3) | Paramagnetic beads for protein binding, cleanup, & on-bead digestion [37] | Fast processing; minimal handling; compatible with detergents; works with low protein amounts; cost-effective [37] | Requires optimized bead-to-protein ratio; performance varies with bead type (carboxylated vs. HILIC) [37] | Arabidopsis thaliana lysates; low-input samples; high-throughput applications [37] |
| Acid-Assisted Methods (SPEED) | Trifluoroacetic acid (TFA) for protein extraction & digestion without detergents [38] [39] | Rapid & simple workflow; minimal steps; enhanced proteome coverage for challenging samples; avoids detergent complications [38] [39] | Acid conditions may not be suitable for all applications; may not disrupt crosslinks in some matrices [39] | Human skin samples; tape-strip proteomics; crosslinked extracellular matrices; challenging samples [39] |
| In-Solution Digestion (ISD) | Direct digestion in solution after protein extraction & cleanup [35] | Simplicity; applicable to various sample types; amenable to automation [35] | Potential incomplete digestion; may require cleanup steps to remove interferents [35] | Barley leaves (OP-ISD protocol showed best performance in this category) [35] |
| S-Trap | Protein suspension trapping in quartz filter for cleanup & digestion [36] | Efficient detergent removal; good recovery; applicable to small-scale samples [36] | Limited sample capacity; specialized equipment required [36] | Neuronal tissues (trigeminal ganglion); limited tissue samples [36] |
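The selection criteria above and the "Optimal Use Cases" column of Table 1 can be condensed into a rough rule-of-thumb helper. The Python sketch below is a hypothetical illustration only. The `SampleProfile` fields, the rule ordering and the 10 μg low-input threshold were chosen for readability and are not recommendations from the cited studies. Real method choice should rest on pilot comparisons with the actual sample type.

```python
from dataclasses import dataclass


@dataclass
class SampleProfile:
    """Hypothetical summary of a sample, used only to illustrate Table 1."""
    protein_ug: float            # available protein amount
    contains_sds: bool           # detergent present in the lysate
    crosslinked_matrix: bool     # e.g. skin or extracellular matrix
    high_throughput: bool = False


def suggest_methods(s: SampleProfile, low_input_ug: float = 10.0) -> list[str]:
    """Return candidate preparation methods following Table 1 heuristics.

    low_input_ug is an arbitrary illustrative cut-off, not a cited value.
    """
    if s.crosslinked_matrix:
        return ["SPEED"]                  # acid extraction for crosslinked tissue
    candidates = []
    if s.protein_ug < low_input_ug or s.high_throughput:
        candidates += ["SP3", "S-Trap"]   # low-input / throughput-friendly
    if s.contains_sds and s.protein_ug >= low_input_ug:
        candidates.append("FASP")         # thorough detergent removal
    if not s.contains_sds:
        candidates.append("ISD")          # simple in-solution digestion
    return candidates
```

Encoding the table as explicit rules makes the assumptions behind a method choice visible and reviewable, even though the rules themselves are only a starting point.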

Detailed Experimental Protocols

SP3 Protocol for Plant and General Protein Samples

The SP3 protocol represents a significant advancement in sample preparation technology, particularly valuable for its compatibility with detergents and applicability to low-input samples. The following optimized protocol is adapted for plant tissues but can be modified for other sample types [37]:

Materials: SDT lysis buffer (4% SDS, 100 mM DTT, 100 mM Tris-HCl, pH 7.6); Sera-Mag Carboxylate-Modified magnetic beads; binding solution (90% ethanol, 5% water, 5% acetic acid); 50 mM TEAB; trypsin; and standard laboratory equipment including a thermomixer and magnetic rack [37].

Procedure:

- Protein Extraction: Homogenize 1 g of frozen plant tissue powder in 1 ml of hot SDT buffer. Incubate at 95°C for 5-10 minutes with continuous shaking [37].

- Clarification: Centrifuge the lysate at 16,000 × g for 10 minutes. Transfer the supernatant to a new tube and determine protein concentration [37].

- Bead-Based Capture: Transfer 100 μg of protein extract to a low-binding tube. Add magnetic beads (10:1 bead-to-protein ratio) and enough binding solution to bring the final ethanol concentration above 50%. Incubate for 10 minutes at room temperature with shaking [37].

- Washing: Place the tube on a magnetic rack until the solution clears. Remove supernatant. Wash beads twice with 70% ethanol, then once with acetonitrile. Briefly air-dry the beads [37].

- Digestion: Resuspend beads in 50 mM TEAB containing trypsin (1:50 enzyme-to-protein ratio). Incubate at 37°C for 2-4 hours with shaking [37].

- Peptide Recovery: Add trifluoroacetic acid to 1% final concentration. Place tube on magnetic rack and transfer the cleared supernatant containing peptides to a new vial for LC-MS/MS analysis [37].
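The ratios in the SP3 procedure translate directly into per-sample amounts. The following Python sketch computes them for a given protein amount and concentration. It assumes that the 10:1 bead-to-protein and 1:50 enzyme-to-protein ratios are by weight, and that the binding solution contains 90% ethanol as listed under Materials. It ignores the volume of the bead suspension, so the returned binding-solution volume is a lower bound. The function name `sp3_setup` is hypothetical.

```python
def sp3_setup(protein_ug: float, protein_conc_ug_per_ul: float,
              bead_ratio: float = 10.0, enzyme_ratio: float = 50.0,
              binding_etoh_frac: float = 0.90,
              target_etoh_frac: float = 0.50) -> dict:
    """Bead mass, minimum binding-solution volume and trypsin amount for SP3.

    Simplification: bead suspension volume is ignored, so the final ethanol
    fraction is binding_etoh_frac * Vb / (Vs + Vb).
    """
    sample_ul = protein_ug / protein_conc_ug_per_ul
    # Solve binding_etoh_frac * Vb = target * (Vs + Vb) for Vb.
    binding_ul = (target_etoh_frac * sample_ul
                  / (binding_etoh_frac - target_etoh_frac))
    return {
        "sample_ul": sample_ul,
        "bead_ug": bead_ratio * protein_ug,        # 10:1 beads:protein (w/w)
        "min_binding_ul": binding_ul,              # add more to exceed 50%
        "trypsin_ug": protein_ug / enzyme_ratio,   # 1:50 enzyme:protein (w/w)
    }
```

Consider 100 μg of protein at 2 μg/μl. This gives a 50 μl sample, 1 mg of beads, at least 62.5 μl of binding solution and 2 μg of trypsin.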

This optimized SP3 protocol completes in approximately 2 hours and demonstrates excellent performance for a wide range of protein inputs without requiring adjustment of bead amount or digestion parameters [37].

SPEED Protocol for Challenging and Crosslinked Samples

The SPEED (Sample Preparation by Easy Extraction and Digestion) protocol offers a detergent-free approach that is particularly effective for challenging samples such as skin tissues and other crosslinked matrices. This method utilizes acid extraction for complete sample dissolution [38] [39]:

Materials: Pure trifluoroacetic acid (TFA); triethylammonium bicarbonate (TEAB); trypsin; and standard laboratory equipment [38] [39].

Procedure:

- Acid Extraction: Add pure TFA to the sample (e.g., skin tissue) at an approximate 1:10 ratio (sample:TFA). Vigorously vortex to ensure complete dissolution. For tough tissues, brief sonication may be applied [39].

- Neutralization: Add an appropriate volume of TEAB to neutralize the acidified solution. The final pH should be approximately 7.5-8.5, which is optimal for tryptic digestion [38].

- Digestion: Add trypsin (1:50 enzyme-to-protein ratio). Incubate at 37°C for 4-6 hours or overnight [38].

- Acidification and Cleanup: Add TFA to 0.1-1% final concentration to stop digestion. Desalt peptides using C18 solid-phase extraction if necessary before LC-MS/MS analysis [38].
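Neat TFA is highly concentrated, so the neutralization step needs a comparatively large volume of base. A stoichiometric estimate shows the scale. The Python sketch below assumes that TFA has a density of about 1.489 g/ml and a molar mass of 114.02 g/mol (roughly 13 M when neat). It also assumes a base that takes up one proton per molecule. These constants are general reference values, not parameters from the cited SPEED studies. The result is only a lower bound, because reaching pH 7.5-8.5 requires excess buffer.

```python
TFA_DENSITY_G_PER_ML = 1.489        # neat trifluoroacetic acid, ~20 °C
TFA_MOLAR_MASS_G_PER_MOL = 114.02


def tfa_molarity() -> float:
    """Approximate molarity of neat TFA in mol/L."""
    return TFA_DENSITY_G_PER_ML * 1000.0 / TFA_MOLAR_MASS_G_PER_MOL


def min_base_volume_ul(tfa_ul: float, base_molarity_m: float) -> float:
    """Lower-bound volume of a 1:1 base that stoichiometrically neutralizes TFA.

    Reaching the target pH needs excess buffer; verify the pH directly.
    """
    tfa_umol = tfa_molarity() * tfa_ul      # (mol/L) * uL = umol
    return tfa_umol / base_molarity_m       # umol / (mol/L) = uL
```

For example, 100 μl of TFA needs at least about 1.3 ml of a 1 M base. If the 1:10 ratio is read as mg tissue to μl TFA, this corresponds to about 10 mg of tissue. The final pH should still be confirmed, for example with indicator strips.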

The SPEED protocol has performed better than conventional methods on challenging samples. In healthy human skin samples, it increased the number of identified protein groups to more than 6,200 [39].

Optimized Protocol for Limited Tissue Samples

For precious or limited samples such as neuronal tissues, an optimized workflow maximizes protein and phosphopeptide recovery [36]:

Materials: Lysis buffer (5% SDS); S-Trap micro columns; dithiothreitol (DTT); iodoacetamide (IAA); trypsin; phosphoric acid; formic acid; and standard centrifugation equipment [36].

Procedure:

- Protein Extraction: Homogenize tissue (e.g., trigeminal ganglion) in 100 μl of 5% SDS lysis buffer at room temperature. Boil the homogenate for 2 minutes, then centrifuge at 14,000 × g for 10 minutes. Collect supernatant [36].

- Reduction and Alkylation: Take a 100 μg protein aliquot. Add DTT to 2 mM final concentration and incubate at 56°C for 30 minutes. Then add IAA to 5 mM final concentration and incubate at room temperature for 45 minutes in the dark [36].

- Acidification and S-Trap Processing: Add 12% phosphoric acid at a 1:10 (v/v) ratio. Add binding/wash buffer at a 6:1 (v/v) ratio relative to the acidified solution. Load the mixture onto the S-Trap column and centrifuge at 4,000 × g for 1-2 minutes [36].

- Digestion: Add trypsin solution in 50 mM TEAB to the column. Incubate at 47°C for 1 hour. Centrifuge to collect peptides, followed by additional elution steps [36].

- Phosphopeptide Enrichment (Optional): For phosphoproteomics, subject the digested peptides to sequential enrichment using Fe-NTA magnetic beads followed by a TiO2-based method to maximize phosphopeptide recovery [36].
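The S-Trap steps add reagents one after another, so each addition slightly dilutes the previous ones. The Python sketch below tracks the running volume. For DTT and IAA it uses the dilution relation v = C_final * V / (C_stock - C_final). It then applies the 1:10 phosphoric acid ratio and the 6:1 binding/wash buffer ratio. The 200 mM DTT and 500 mM IAA stock concentrations are hypothetical defaults, not values from the cited protocol.

```python
def add_to_final(volume_ul: float, stock_mm: float, final_mm: float) -> float:
    """Volume of stock to add so the mixture reaches final_mm.

    From C_stock * v = C_final * (V + v):  v = C_final * V / (C_stock - C_final).
    """
    if stock_mm <= final_mm:
        raise ValueError("stock must be more concentrated than the target")
    return final_mm * volume_ul / (stock_mm - final_mm)


def strap_volumes(lysate_ul: float, dtt_stock_mm: float = 200.0,
                  iaa_stock_mm: float = 500.0) -> dict:
    """Sequential reagent volumes for the S-Trap steps (hypothetical stocks)."""
    dtt_ul = add_to_final(lysate_ul, dtt_stock_mm, 2.0)   # DTT to 2 mM
    v = lysate_ul + dtt_ul
    iaa_ul = add_to_final(v, iaa_stock_mm, 5.0)           # IAA to 5 mM
    v += iaa_ul
    phosphoric_ul = v / 10.0                              # 12% H3PO4 at 1:10 (v/v)
    v += phosphoric_ul
    binding_ul = 6.0 * v                                  # binding/wash buffer, 6:1
    return {"dtt_ul": dtt_ul, "iaa_ul": iaa_ul,
            "phosphoric_ul": phosphoric_ul, "binding_ul": binding_ul,
            "total_ul": v + binding_ul}
```

The total load volume grows about sevenfold after the binding buffer is added. If it exceeds the capacity of the micro column, load the mixture in successive centrifugation passes.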

This specialized protocol has been successfully applied to tiny neuronal tissues (0.1 g mouse trigeminal ganglion), significantly improving yields for both proteomic and phosphoproteomic analyses [36].


The Scientist's Toolkit: Essential Research Reagents and Materials

Table 2: Essential Research Reagents and Materials for Sample Preparation

| Category | Specific Reagent/Kit | Function | Application Notes |
|---|---|---|---|
| Lysis Reagents | 5% SDS Lysis Buffer [36] | Protein extraction & denaturation | Optimal for neuronal tissues; use at room temperature to prevent precipitation [36] |
| | SDT Buffer (4% SDS, 100 mM DTT) [37] | Comprehensive protein extraction | Effective for plant tissues with cell walls; requires heating [37] |
| | Pure Trifluoroacetic Acid (TFA) [38] | Acid-based extraction | Detergent-free alternative; ideal for crosslinked samples like skin [38] [39] |
| Reduction/Alkylation | Dithiothreitol (DTT) [36] | Disulfide bond reduction | Standard concentration: 2-10 mM; incubate at 56°C for 30 min [36] |
| | Tris(2-carboxyethyl)phosphine (TCEP) [35] | Alternative reducing agent | More stable than DTT; effective at lower concentrations [35] |